A Smartphone App to Screen for HIV-Related Neurocognitive Impairment

Reuben N. Robbins, PhD¹, Henry Brown, BSc², Andries Ehlers, BTech², John A. Joska, MBChB, PhD³, Kevin G.F. Thomas, PhD⁴, Rhonda Burgess, MBA⁵, Desiree Byrd, PhD, ABPP-CN⁵, Susan Morgello, MD⁵

¹HIV Center for Clinical and Behavioral Studies, Columbia University and the New York State Psychiatric Institute, New York, New York; ²Envisage IT, Cape Town, South Africa; ³The Department of Psychiatry and Mental Health, University of Cape Town, Cape Town, South Africa; ⁴ASCENT Laboratory, Department of Psychology, University of Cape Town, Cape Town, South Africa; ⁵The Icahn School of Medicine at Mount Sinai, New York, New York

Corresponding author: rnr2110@columbia.edu

Journal MTM 3:1:23–36, 2014

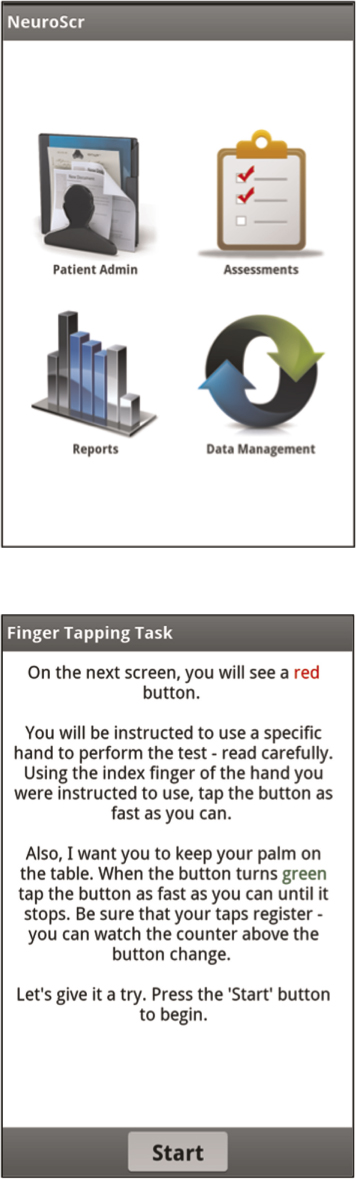

Background: Neurocognitive impairment (NCI) is one of the most common complications of HIV infection and has serious medical and functional consequences. However, screening for it is not routine, and NCI often goes undiagnosed. Screening for NCI in HIV disease faces numerous challenges, such as a limited range of screening tests, the need for specialized equipment and apparatuses, and the need for highly trained personnel to administer, score, and interpret screening tests. To address these challenges, we developed NeuroScreen, a novel smartphone-based screening tool to detect HIV-related NCI that includes an easy-to-use graphical user interface with ten highly automated neuropsychological tests.

Aims: To examine NeuroScreen’s: 1) acceptability among patients and different potential users; 2) test construct and criterion validity; and 3) sensitivity and specificity to detect NCI.

Methods: Fifty HIV+ individuals were administered both a gold-standard neuropsychological test battery designed to detect HIV-related NCI and NeuroScreen. The HIV+ participants and eight potential provider-users of NeuroScreen were asked about its acceptability.

Results: There was a high level of acceptability of NeuroScreen by patients and potential provider-users. Moderate to high correlations between individual NeuroScreen tests and paper-and-pencil tests assessing the same cognitive domains were observed. NeuroScreen also demonstrated high sensitivity to detect NCI.

Conclusion: NeuroScreen, a highly automated, easy-to-use smartphone-based screening test to detect NCI among HIV patients that is usable by a range of healthcare personnel, could help make routine screening for HIV-related NCI feasible. While NeuroScreen demonstrated robust psychometric properties and acceptability, further testing with larger and less neurocognitively impaired samples is warranted.

INTRODUCTION

Neurocognitive impairment (NCI) is one of the most common complications of HIV infection, affecting between 30% and 84% of people living with HIV (PLWH), depending on the population studied.1–3 Among those on antiretroviral therapy (ART) and with well-controlled viremia, mild NCI is much more common than severe impairment (HIV-associated dementia).1–3 The NCI associated with HIV, also known as HIV-associated neurocognitive disorder (HAND), typically affects motor functioning, attention/working memory, processing speed, executive functioning, and learning and memory, a pattern that reflects cortical and subcortical brain dysfunction.1,4,5 Even mild NCI has significant medical and functional consequences, such as increased risk of mortality (even in those receiving ART), greater likelihood of developing more severe impairment, serious disruptions in activities of daily living such as ART adherence, and decreased quality of life,6–14 placing many PLWH at risk for worse health outcomes.15

Screening for NCI in HIV is essential to good comprehensive care and treatment strategies.16,17 Routine screening can help providers detect impairment at its very first signs, determine when to initiate and adjust ART regimens, track and monitor neurocognitive function, and educate patients in a timely manner about the impact of NCI and HAND and ways to minimize it.16,17 Among those on or initiating ART, providers can use behavioral planning to tailor adherence strategies that minimize the impact of HAND on adherence. Furthermore, if and when adjuvant pharmacotherapies or behavioral interventions become available for HAND, screening for NCI will be the essential first step in linking patients with the appropriate services. Nevertheless, screening for NCI among PLWH is uncommon because it faces numerous challenges.18,19

Recognizing HIV-related NCI can be difficult. It typically presents as mild impairment without gross memory problems, or is apparent only through patient report, which makes it easy to overlook.20 The currently available screening tests for HIV-related NCI have one or more of the following shortcomings: they were designed to detect only the most severe form of HAND, HIV-associated dementia, or have poor psychometric properties for detecting its milder forms;20–23 they require additional equipment, such as stopwatches, pens and pencils, test forms, and other expensive specialized testing apparatuses;24–29 or they consist of an algorithm based on patient medical data that does not actually measure neurocognitive function.30 Furthermore, many screening tests require highly trained personnel to administer, score, and interpret them, resources that a busy and financially constrained health clinic may not have.

Computerized neuropsychological tests are becoming more commonplace in the detection and diagnosis of a wide range of neurocognitive disorders.31 Newer computer technologies, such as smartphones and tablets, have not been widely used for this purpose, despite being well suited to neuropsychological testing. Because of their low cost, touchscreens, network connectivity, ultra-portability, various sensors, and powerful processing capabilities, smartphones and tablets are becoming integral components of a variety of other healthcare practices.32 Smartphones and tablets could bring great efficiency, accuracy, and interactivity to neuropsychological testing, making it more accessible and less resource-intensive. For example, touchscreen technology may be able to offer accurate digital analogues of widely used paper-and-pencil neuropsychological tests, such as the Trail Making Test,33 with the added benefits of automated timing and systematic error recording. Hence, a smartphone-based screening test could help make routine screening for NCI acceptable and feasible.34
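The following minimal Python sketch shows how a touchscreen trail-making analogue might score a run, with automated timing and error recording. It is not NeuroScreen's implementation. The `Tap` record, the `score_trails` function, and the rule that any touch other than the next expected circle counts as one error are all hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Tap:
    """One touch event (hypothetical record format)."""
    time_s: float  # seconds since the test screen appeared
    label: str     # label of the circle the participant touched


def score_trails(taps, sequence):
    """Return (completion_time_s, error_count) for a touch-based trail test.

    A touch on the next expected circle advances the trail. Any other touch
    is counted as one error, and the participant continues from the last
    correct circle. completion_time_s is None if the trail was not finished.
    """
    next_index = 0
    errors = 0
    completion_time = None
    for tap in taps:
        if next_index == len(sequence):
            break  # trail already completed; ignore any extra touches
        if tap.label == sequence[next_index]:
            next_index += 1
            if next_index == len(sequence):
                completion_time = tap.time_s  # timing stops on the last circle
        else:
            errors += 1
    return completion_time, errors


# Example: an alternating number-letter trail with one out-of-order touch.
sequence = ["1", "A", "2", "B"]
taps = [Tap(0.8, "1"), Tap(1.5, "A"), Tap(2.1, "B"), Tap(2.9, "2"), Tap(3.6, "B")]
print(score_trails(taps, sequence))  # (3.6, 1)
```

Because the device records every touch with a timestamp, completion time and error counts come directly from the event log. No stopwatch or hand tally is needed.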

To address this gap in screening tests for HIV-related NCI, NeuroScreen was developed in collaboration with neuropsychologists, NeuroAIDS researchers, HIV psychiatrists, potential clinical users, and software engineers. NeuroScreen is a software application (app) for smartphones running the Android operating system. It takes advantage of touchscreen technology and is designed to assess individuals across all the major domains of neurocognitive functioning most affected by HIV (processing speed, executive functioning, working memory, motor speed, learning, and memory). It also captures other fine-grained neurocognitive data, such as task errors. The neurocognitive screening test battery is embedded in a graphical user interface that automates test administration and allows for easy data management and reporting. The app is completely self-contained and does not require any additional equipment (e.g., paper forms, pencils, stopwatches, or specialized apparatus). All tests are automatically timed, scored, and reported, so no hand scoring, score conversion, or synchronized use of stopwatches is needed, and results are available immediately. Administrators are required to step through all of the standardized instructions, ensuring that every administration uses the same set of instructions. Because NeuroScreen runs on smartphones and tablets, it is ultra-portable and can be integrated into other healthcare tools built on these platforms. It may also allow any healthcare professional to administer screenings in almost any location, such as remote or rural clinics, or fast-paced, busy clinics that require flexible use of examination rooms.
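The enforced ordering of instructions, practice trials, and tests can be pictured as a simple linear state machine. The sketch below is hypothetical: the `AdministrationSession` class and its step names do not come from the app. It assumes each test has an instructions step, an optional practice step, and a scored test step, and that none of these can be skipped or reordered.

```python
class AdministrationSession:
    """Hypothetical sketch of a fixed, non-skippable administration sequence."""

    def __init__(self, tests):
        # tests: list of (test_name, has_practice) pairs in administration order
        self.steps = []
        for name, has_practice in tests:
            self.steps.append((name, "instructions"))
            if has_practice:
                self.steps.append((name, "practice"))
            self.steps.append((name, "test"))
        self.position = 0

    def current_step(self):
        """The only step the administrator may perform next (None when done)."""
        if self.position < len(self.steps):
            return self.steps[self.position]
        return None

    def complete(self, step):
        """Mark a step done; anything other than the current step is rejected."""
        if step != self.current_step():
            raise ValueError(f"expected {self.current_step()}, got {step}")
        self.position += 1

    def finished(self):
        return self.position == len(self.steps)


session = AdministrationSession([("Trails 1", True), ("Finger Tapping", False)])
session.complete(("Trails 1", "instructions"))
print(session.current_step())  # ('Trails 1', 'practice')
```

Under this design, only the current step can be marked complete. Standardization therefore follows from the structure of the session rather than from administrator discipline.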

The purpose of this study was to examine: 1) the acceptability of NeuroScreen among HIV+ patients and different potential provider-users; 2) the construct validity of the NeuroScreen tests; and 3) NeuroScreen’s criterion validity, estimated as its sensitivity in detecting NCI as determined by the gold-standard HIV neuropsychological test battery. The specificity of NeuroScreen was also calculated. The sensitivity and specificity of NeuroScreen’s optimal cut-off score were compared with those of two other screening tests for NCI in HIV within this sample.

METHODS

Participants

Fifty HIV+ participants enrolled in the Manhattan HIV Brain Bank study (MHBB; U24MH100931, U01MH083501), a longitudinal study examining the neurocognitive and neurologic effects of HIV, were recruited. Eligibility criteria for the MHBB include being fluent in English, being willing to consent to postmortem organ donation, and one of the following: having a condition indicative of advanced HIV disease or another disease without effective therapy, having a CD4 cell count of no more than 50 cells/µL for at least 3 months, or being at risk of near-term mortality in the judgment of the primary physician. All MHBB participants undergo a battery of neurologic, neuropsychological, and psychiatric examinations every 6 to 12 months while enrolled in the study. General medical information, plasma viral loads, CD4 cell counts, and antiretroviral therapy (ART) histories are obtained through patient interview, laboratory testing, and medical record review.

Participants were not eligible for the current study if they could not complete the full neuropsychological test battery at their most recent MHBB study visit, or if they had peripheral neuropathy of the upper extremities that made using their hands and fingers to complete neuropsychological tests excessively difficult, as determined by participant self-report or as annotated in the MHBB neurological assessment. A focus group of nine potential provider-users of NeuroScreen (physicians, psychologists, psychometrists, nurses, and laboratory technicians) was also convened to elicit feedback on the application’s acceptability. Data were collected from May 2012 to February 2013. The Institutional Review Boards of the Mount Sinai School of Medicine and the New York State Psychiatric Institute approved the conduct of this study.

Measures

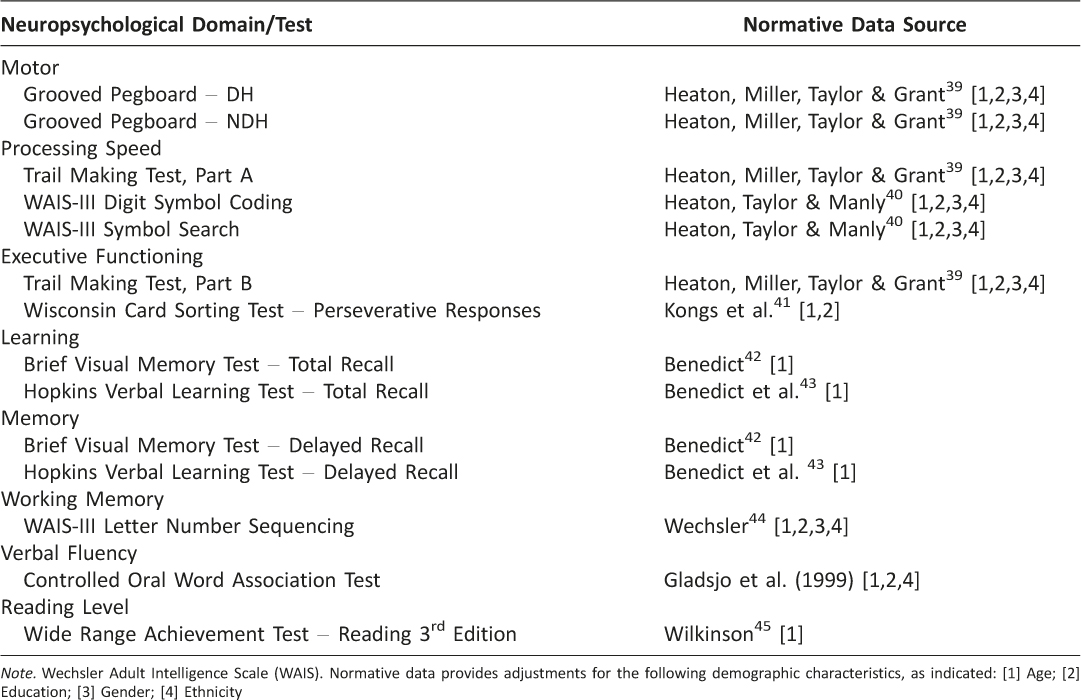

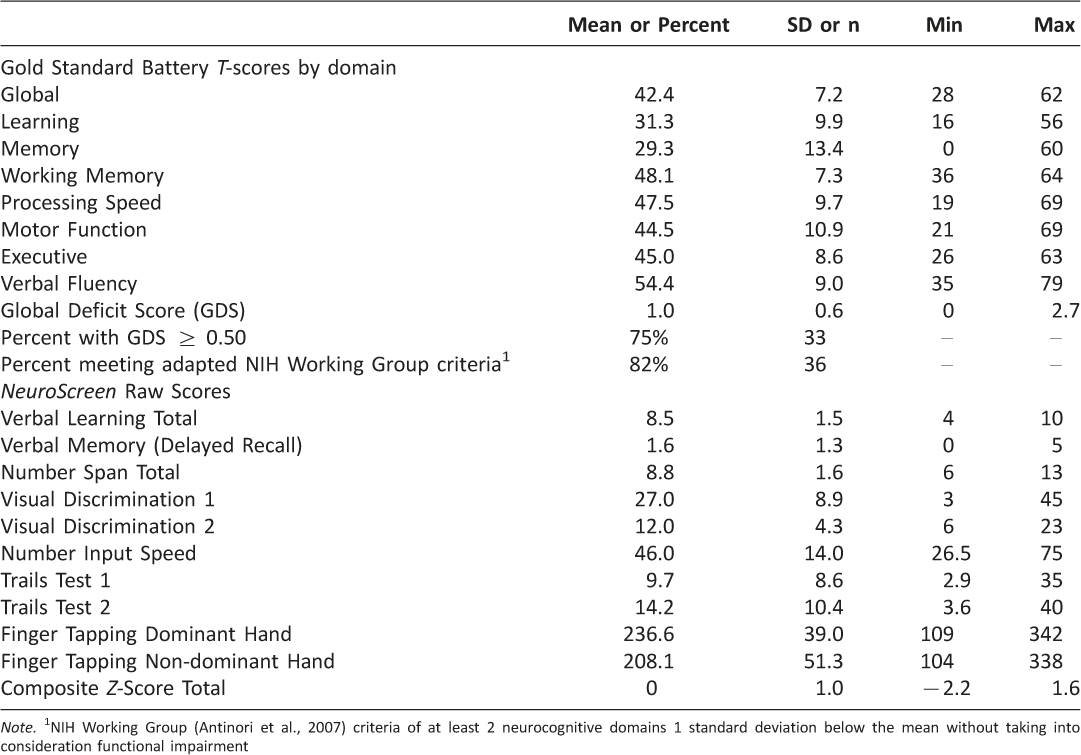

Neuropsychological evaluation

All participants completed a comprehensive two-hour neuropsychological test battery as part of their participation in the MHBB study. The test battery was administered and scored by trained psychometrists using standardized procedures, and assessed the following seven domains: processing speed, learning, memory, executive functioning, verbal fluency, working memory, and motor speed (Table 1). This battery has been used in numerous studies and has been well validated to detect NCI in the context of HIV.24,35

Table 1: Gold-Standard Neuropsychological Test Battery

Embedded in the MHBB battery are the Grooved Pegboard Test, the Hopkins Verbal Learning Test (HVLT), and the WAIS-III Digit Symbol Coding test. These allowed us to compute impairment scores based on the Carey et al. (2004) mild NCI screening approach. The Carey approach uses either 1) the HVLT learning total score and the Grooved Pegboard non-dominant hand score, or 2) the HVLT learning total score and the Digit Symbol Coding score. In each variant, mild impairment is indicated if both T-scores are less than 40 or if at least one T-score is less than 35. The published sensitivities of the Carey et al. approaches are 75% for the first variant and 66% for the second. In addition, MHBB participants receive the HIV Dementia Scale (HDS),21 and a score less than 11 was used as an indicator of impairment possibly indicative of HIV dementia.
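Both screening rules described above reduce to simple thresholds. The following minimal sketch assumes that demographically corrected T-scores and the HDS total are already available. The function names are hypothetical.

```python
def carey_impaired(t_hvlt_learning, t_second_test):
    """Two-test mild-NCI rule as described in the text (hypothetical helper).

    t_second_test is the Grooved Pegboard non-dominant hand T-score in the
    first variant, or the Digit Symbol Coding T-score in the second.
    """
    both_below_40 = t_hvlt_learning < 40 and t_second_test < 40
    any_below_35 = t_hvlt_learning < 35 or t_second_test < 35
    return both_below_40 or any_below_35


def hds_flag(hds_total):
    """HIV Dementia Scale: a total below 11 flags possible HIV dementia."""
    return hds_total < 11


print(carey_impaired(38, 39))  # True: both T-scores below 40
print(carey_impaired(45, 34))  # True: one T-score below 35
print(carey_impaired(38, 42))  # False
print(hds_flag(10), hds_flag(11))  # True False
```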

To quantify performance on the gold-standard neuropsychological battery, we calculated the Global Deficit Score (GDS) for each participant. The GDS is a widely used and robust composite measure of neurocognitive functioning.24,36,37 Demographically corrected T-scores were first generated for each task. Domain T-scores were then obtained by averaging the T-scores of the tasks within each cognitive domain. These domain T-scores were converted to deficit scores ranging from 0 to 5 as follows:

- a T-score of 40 or higher received a deficit score of 0
- 35 to 39 received a score of 1
- 30 to 34 received a score of 2
- 25 to 29 received a score of 3
- 20 to 24 received a score of 4
- less than 20 received a score of 5

A deficit score of 0 was considered normal performance, whereas a score of 5 was considered severe impairment. The GDS was the average of all 7 domain deficit scores. For the purposes of this study, the sensitivity and specificity analyses used the commonly used and validated GDS cut-off of ≥ 0.5, which is considered to reflect mild impairment.37 We also adapted the criteria for diagnosing HAND proposed by an NIH Working Group.38 Individuals with T-scores below 40 in at least two neurocognitive domains were considered to have NCI. This classification did not take functional status into account, although the full criteria require it for a diagnosis of HAND.
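The following minimal sketch implements the GDS and adapted NIH computations as described in this section. It assumes a dictionary of task T-scores grouped by domain. Averaged domain T-scores need not be integers, so the sketch treats each band's lower bound as inclusive (e.g., 39.5 maps to a deficit of 1). That boundary handling is an assumption, and the function names are hypothetical.

```python
from statistics import mean


def deficit_score(t):
    """Map a domain T-score to a 0-5 deficit score (lower bounds inclusive)."""
    for lower_bound, deficit in ((40, 0), (35, 1), (30, 2), (25, 3), (20, 4)):
        if t >= lower_bound:
            return deficit
    return 5  # T-score below 20


def domain_t_scores(task_t_scores):
    """task_t_scores: dict of domain name -> list of task T-scores."""
    return {domain: mean(ts) for domain, ts in task_t_scores.items()}


def global_deficit_score(task_t_scores):
    """Average of the domain deficit scores; >= 0.5 was treated as impaired."""
    return mean(deficit_score(t) for t in domain_t_scores(task_t_scores).values())


def nih_adapted_impaired(task_t_scores, min_domains=2):
    """Adapted NIH criteria: domain T-score below 40 in at least min_domains domains."""
    below = [t for t in domain_t_scores(task_t_scores).values() if t < 40]
    return len(below) >= min_domains


example = {
    "processing speed": [42, 38],   # domain T = 40 -> deficit 0
    "learning": [33],               # deficit 2
    "memory": [31, 29],             # domain T = 30 -> deficit 2
    "executive functioning": [45],  # deficit 0
    "verbal fluency": [41],         # deficit 0
    "working memory": [37],         # deficit 1
    "motor speed": [50, 48],        # deficit 0
}
gds = global_deficit_score(example)
print(round(gds, 2), gds >= 0.5)      # 0.71 True
print(nih_adapted_impaired(example))  # True (learning, memory, working memory)
```

In this invented example, one mildly impaired domain and two moderately impaired domains are enough to exceed the GDS cut-off of 0.5. They also meet the adapted NIH rule.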

Smartphone neuropsychological tests

Participants were administered NeuroScreen by trained research staff either immediately after completing the MHBB test battery or within 7 days of completing it. NeuroScreen briefly assesses individuals across six neuropsychological domains. The current version of NeuroScreen was implemented on a large-format smartphone, the Samsung Galaxy Note®, which has a 5.3-inch (diagonal) display. The neuropsychological tests are embedded in a graphical user interface that allows the administrator to enter patient data, administer tests, generate instant raw results, and save raw results to an internal storage card. Although NeuroScreen can store and maintain patient records and test scores, only the participant ID and handedness were entered in the app for the purposes of this study. Once all data had been transferred to the principal investigator’s secure, encrypted hard drive, all data were wiped from the device. Once the app is started, the administrator is required to read standardized test instructions and is prompted at appropriate points to offer practice trials on selected tests. The administrator must then step through all of the tests in sequence. NeuroScreen consists of ten neuropsychological tests that assess the domains of learning, delayed recall/memory, working memory, processing speed, executive functioning, and motor speed (see Appendix A for a complete description of each test).

Standard score conversion

All raw scores were converted to Z-scores. Z-scores for tests based on completion time (Trails 1 and 2, and Number Input Speed) were reverse coded (multiplied by −1) so that higher scores indicated better (faster) performance. A composite Z-total score was computed as the Z-score of the sum of all the individual test Z-scores and was used as the final total score for data analysis. Higher (more positive) scores indicated better performance.
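The score conversion can be sketched as follows. This sketch assumes that Z-scores are standardized against the study sample's own mean and SD, since no normative data were collected. The function names are hypothetical. The sketch illustrates the reverse coding and the Z-of-sum composite; it does not reproduce the SPSS procedure actually used.

```python
from statistics import mean, stdev


def z_scores(values, reverse=False):
    """Standardize against the sample mean and SD; reverse=True flips the sign."""
    m, s = mean(values), stdev(values)
    sign = -1 if reverse else 1
    return [sign * (v - m) / s for v in values]


def composite_z_total(raw_by_test, timed_tests):
    """raw_by_test: dict test name -> raw scores, one per participant in the same order.

    Completion-time tests listed in timed_tests are reverse coded so that
    higher always means better. The composite is the Z-score of the summed Z-scores.
    """
    per_test = [z_scores(raw, reverse=(name in timed_tests)) for name, raw in raw_by_test.items()]
    summed = [sum(zs) for zs in zip(*per_test)]
    return z_scores(summed)


raw = {
    "Trails 1": [10.0, 12.0, 8.0],  # seconds; lower is better
    "Word learning": [8, 6, 9],     # words recalled; higher is better
}
print([round(z, 2) for z in composite_z_total(raw, timed_tests={"Trails 1"})])
```

In this toy example, the third participant is both the fastest and the best learner, so that participant receives the highest composite score.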

Smartphone acceptability

To assess the acceptability of the smartphone format for taking neuropsychological tests, patient participants were asked about their experience using smartphones with touchscreens and whether they owned one. They were asked to indicate which tests were the easiest and the most difficult to take, and how easy or difficult they found the smartphone to use. They were also asked a series of open-ended questions about what it was like to take the tests on the smartphone. To assess acceptability among potential users of NeuroScreen, a small mixed group (n = 10) of physicians, psychologists, psychometrists, and research assistants met in a focus-group format. NeuroScreen was introduced and demonstrated, and potential users had the opportunity to try the application. Potential users were asked to offer feedback on NeuroScreen, and written notes of their feedback were recorded.

Statistical Analysis

Univariate analyses were conducted to examine participant characteristics and acceptability data. Pearson correlation coefficients were computed to compare NeuroScreen tests with their closest paper-and-pencil analogue tests. They were also used to compare overall performance on the gold-standard battery with the NeuroScreen total score. To examine the sensitivity and specificity of various cut-off scores, two receiver operating characteristic (ROC) curves were computed. The first used the GDS as the state variable (1 = GDS ≥ 0.5; 0 = GDS < 0.5). The second used the adapted NIH Working Group criteria as the state variable (1 = T-scores at least 1 SD below the mean in two neurocognitive domains; 0 = otherwise). Positive and negative predictive values, as well as sensitivity and specificity, were computed for the optimized NeuroScreen cut-off score and for the HDS and Carey et al. screening batteries. All analyses were conducted using IBM SPSS Statistics version 20 (IBM Corp, 2011).
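The sketch below illustrates the ROC analysis described above. It tabulates sensitivity and specificity across candidate cut-offs of the composite Z-score. It also computes the area under the curve as the probability that an impaired participant scores lower than an unimpaired one (the Mann-Whitney formulation). The sketch assumes, consistent with the scoring above, that lower composite scores indicate impairment. The function names are hypothetical, and the study's analyses were run in SPSS, not with this code.

```python
def roc_points(scores, impaired):
    """(cut-off, sensitivity, specificity) for every observed score used as a cut-off.

    A participant screens positive when score <= cut-off, because lower
    composite scores indicate worse performance. impaired holds the 1/0
    gold-standard state variable.
    """
    n_pos = sum(impaired)
    n_neg = len(impaired) - n_pos
    points = []
    for cut in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, impaired) if s <= cut and y == 1)
        tn = sum(1 for s, y in zip(scores, impaired) if s > cut and y == 0)
        points.append((cut, tp / n_pos, tn / n_neg))
    return points


def auc(scores, impaired):
    """P(impaired participant scores lower than unimpaired one); ties count one half."""
    pos = [s for s, y in zip(scores, impaired) if y == 1]
    neg = [s for s, y in zip(scores, impaired) if y == 0]
    wins = sum(1.0 if p < n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


scores = [-1.0, 0.5, 0.2, 1.0]
impaired = [1, 1, 0, 0]
print(roc_points(scores, impaired))
print(auc(scores, impaired))  # 0.75
```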

A total of six cases were dropped from some of the analyses because of incomplete data or invalid NeuroScreen administrations. Three participants were not included in the correlation, ROC, or sensitivity and specificity analyses:

- One was unable to follow the instructions on several tasks and refused the administrator’s attempts to correct this and ensure the instructions were understood.
- One reported during test administration that he had recently been diagnosed with cataracts and that his vision was very blurry, making the stimuli on the smartphone screen difficult to discern.
- The third reported very poor hearing due to an accident and complained that he could not make out some of the recorded stimuli even with the device at full volume.

Another three participants were excluded from the ROC and sensitivity and specificity analyses because they had incomplete NeuroScreen test data. Two of these had missing Finger Tapping data (one for the dominant hand and one for the non-dominant hand) because of a software malfunction: NeuroScreen froze in both cases, and the data were lost upon restart. The third did not have accurate Number Span forward data, because this participant was interrupted during the subtest and no total score could be calculated.

RESULTS

Sample Characteristics

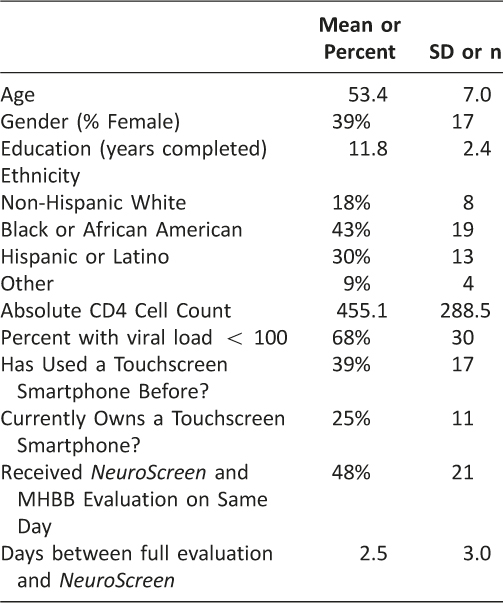

As Table 2 shows, the sample was predominantly male and African American. On average, participants were over the age of 50 and had less than 12 years of education.

Table 2: Participant Characteristics (N = 44)

Gold-Standard HIV Neuropsychological Battery Performance

Results from the full neuropsychological battery (Table 3) indicated that on average, participants had a global T-score of 42.4 (SD = 7.2). On average, the domains of working memory, processing speed, motor function, executive function, and verbal fluency had T-scores above 40. The domain T-scores of learning and memory were, on average, less than 40. The mean GDS was 1.0 (SD = 0.6), indicating that a majority of the sample had NCI. Classifying participants as impaired using the GDS score (≥0.5 indicates impairment) indicated that 75% (n = 33) of the sample was impaired. Using the adapted NIH Working Group neurocognitive criteria to classify participants as impaired indicated that 82% (n = 36) of the sample was impaired.

Table 3: Neuropsychological Test Performance

NeuroScreen Performance

Table 3 displays raw scores for all NeuroScreen tests, as well as the composite Z-score total for the sample. On average, participants learned just over 8 words across two learning trials (5 words per trial) and recalled almost 2 words after a 5-minute delay. The mean total Number Span score (forward and backward) was about 9 (maximum possible = 17). The mean total number of correct responses was about 27 on Visual Discrimination 1 (maximum possible = 61) and 12 on Visual Discrimination 2 (maximum possible = 150). The mean completion time on Number Input Speed was 46 seconds. Mean completion times were just under 10 seconds for Trails Test 1 and just over 14 seconds for Trails Test 2. The mean total number of finger taps across 5 trials was about 236 for the dominant hand and about 208 for the non-dominant hand. An independent-samples t-test compared total performance (composite Z-score total) between those who received NeuroScreen on the same day as the MHBB battery and those who completed it on a later date. No difference was found.

Acceptability

Sixty-one percent (n = 27) of participants reported never having used a smartphone with a touchscreen before. Among the 39% (n = 17) who had used one before, 11 (25% of the sample) reported owning a smartphone with a touchscreen. Ninety-three percent of the sample (n = 41) reported feeling “Very comfortable” or “Comfortable” using the study smartphone, and seventy-three percent (n = 32) reported that the study phone was “Easy” or “Very easy” to use. Only two participants reported that the phone was “Somewhat difficult” or “Very difficult” to use.

When asked which tests were the most difficult to use on the smartphone, 9% (n = 4) reported none, 30% (n = 13) reported the Trail Making tests, 84% (n = 37) reported the Number Input Speed test, 18% (n = 8) reported Visual Discrimination 1, 25% (n = 11) reported Visual Discrimination 2, and 32% (n = 14) reported the Finger Tapping test. When asked which tests were the easiest to use on the smartphone, 84% (n = 37) reported the Trail Making tests, 36% (n = 16) reported the Number Input Speed test, 96% (n = 42) reported Visual Discrimination 1, 84% (n = 37) reported Visual Discrimination 2, and 84% (n = 37) reported Finger Tapping. In response to open-ended questions, participants overall reported that they did not have any problems with the tests. They also said that they enjoyed the touchscreen format, found the instructions easy to follow, and would not mind receiving a similar screening test during regular HIV care visits.

Feedback from the potential provider-users in the focus-group format was generally positive, and they expressed interest in the app. Providers believed that it would be a useful addition to clinical care and a tool they would incorporate into their practices. Several providers indicated that a tablet version might be easier to use, more acceptable to providers, and more easily integrated into care routines.

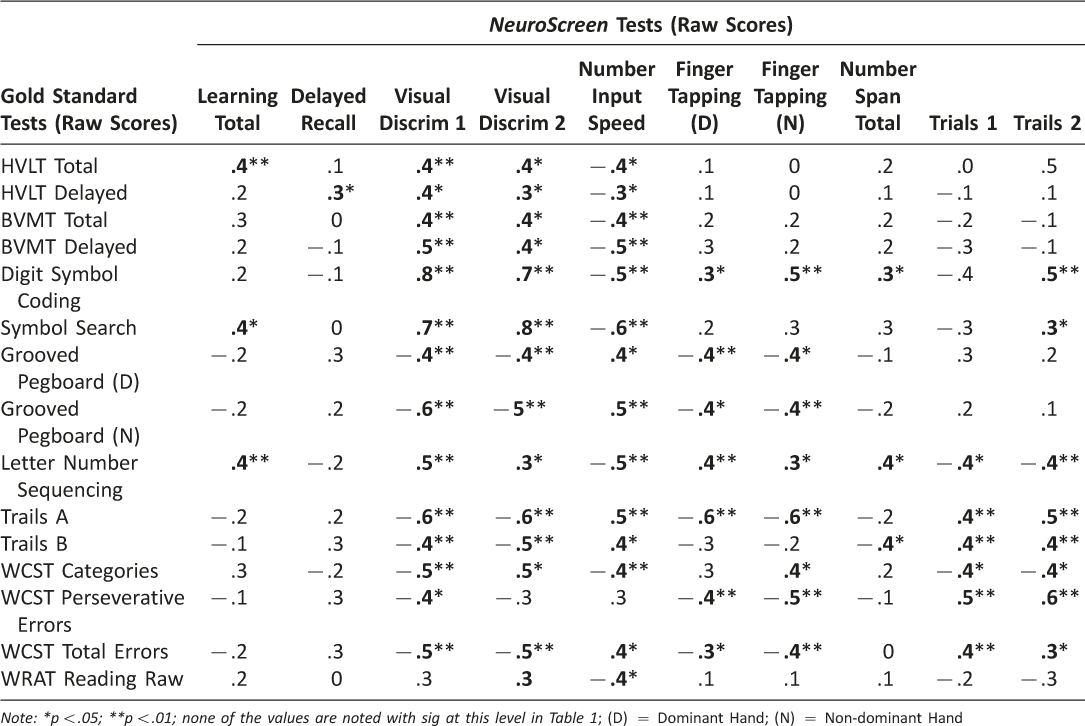

Construct Validity

To examine convergent validity between the NeuroScreen tests and the gold-standard tests, Pearson correlation coefficients were computed for each NeuroScreen test and a corresponding analogue test or tests from the larger battery measuring the same neurocognitive domain. Table 4 displays the correlation matrix between NeuroScreen and gold-standard tests. Moderate to strong and statistically significant correlations between the NeuroScreen and gold-standard tests were observed for verbal learning, delayed recall of word items, processing speed, motor functioning, attention/working memory, and executive functions. The NeuroScreen tests of processing speed were moderately to strongly correlated with all of the gold-standard tests.

Table 4: Correlations Between NeuroScreen and the Gold Standard Tests (N = 44)

Global functioning

The total NeuroScreen composite Z-score was significantly, positively correlated with the global T-score (r(44) = .61, p < .01) and significantly, negatively correlated with the GDS score (r(44) = −.59, p < .01) from the gold-standard test battery, such that better performance on NeuroScreen was significantly related to better overall performance on the full neuropsychological test battery.

Criterion Validity

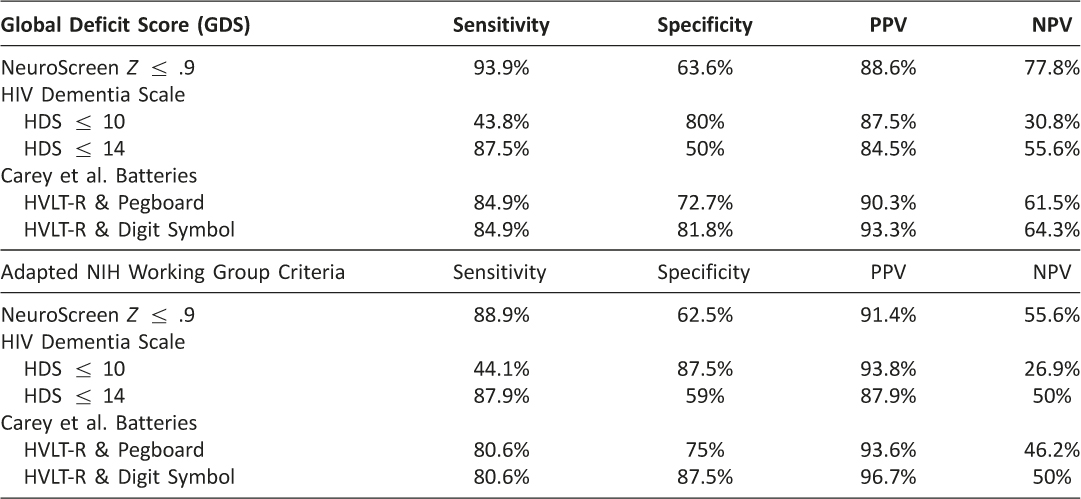

To determine how well NeuroScreen can detect NCI, useful cut-off scores were identified via ROC analyses. ROC curves were computed for the NeuroScreen composite Z-score total, using either the GDS or the adapted NIH Working Group neurocognitive criteria to determine impairment. The area under the curve was .82 when using the GDS criteria and .76 when using the NIH Working Group criteria. We computed the Youden index (sensitivity − (1 − specificity), equivalently sensitivity + specificity − 1) for every possible composite Z-score cut-off. This identified the best combination of sensitivity and specificity under each set of diagnostic criteria. The highest Youden index using the GDS criteria was .6, which corresponded to a cut-off score of .9 or less. Using this cut-off yielded 94% sensitivity, 64% specificity, 89% positive predictive value, and 78% negative predictive value (Table 5), with 4 false positives and 2 false negatives. The highest Youden index using the adapted NIH Working Group criteria was .5, which also corresponded to a Z-score cut-off of .9 or less. Using this cut-off yielded 89% sensitivity, 63% specificity, 91% positive predictive value, and 56% negative predictive value (Table 5), with 3 false positives and 4 false negatives.
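The reported predictive values follow directly from the confusion-matrix counts. Under the GDS criterion, 33 of 44 participants were impaired, with 2 false negatives and 4 false positives, which leaves 31 true positives and 7 true negatives. Under the adapted NIH criterion, 36 were impaired, with 4 false negatives and 3 false positives, which leaves 32 true positives and 5 true negatives. The hypothetical helpers below recompute the metrics from these counts. They also pick the cut-off with the largest Youden index from a list of candidates.

```python
def screening_metrics(tp, fp, fn, tn):
    """Standard screening-test metrics from a 2 x 2 confusion matrix."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "youden": sensitivity + specificity - 1,  # = sensitivity - (1 - specificity)
    }


def best_cutoff(points):
    """points: (cut-off, sensitivity, specificity) tuples; returns the max-Youden one."""
    return max(points, key=lambda p: p[1] + p[2] - 1)


# GDS criterion: 33 impaired, 11 unimpaired, 2 false negatives, 4 false positives.
gds = screening_metrics(tp=31, fp=4, fn=2, tn=7)
# Adapted NIH criterion: 36 impaired, 8 unimpaired, 4 false negatives, 3 false positives.
nih = screening_metrics(tp=32, fp=3, fn=4, tn=5)
for label, metrics in (("GDS", gds), ("NIH", nih)):
    print(label, {name: round(value, 3) for name, value in metrics.items()})
```

The GDS counts reproduce the values reported in the Discussion: sensitivity 93.9%, specificity 63.6%, PPV 88.6%, and NPV 77.8%.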

Table 5: Sensitivity and Specificity for Screening Tests Calculated from Study Sample (N = 44)

Table 5 shows the sensitivities and specificities of three screening approaches:

- NeuroScreen.
- The HIV Dementia Scale (HDS), using cut scores of ≤10 and ≤14 to indicate impairment.
- The Carey et al. (2004) approach, in which at least mild impairment was indicated by a T-score 1 SD below the mean on both Digit Symbol and Grooved Pegboard non-dominant hand, or 2 SDs below the mean on one of those tests.

Each was evaluated against NCI as determined from the gold-standard neuropsychological test battery by the GDS and by the adapted NIH Working Group criteria. Under both criteria, NeuroScreen had better sensitivity than either the HDS or the Carey et al. approach. NeuroScreen also had better specificity than the HDS, though its specificity was slightly lower than that of the Carey et al. approach.

DISCUSSION

In a sample of HIV-infected individuals, a computerized neuropsychological screening test battery designed for a smartphone running the Android operating system (NeuroScreen) was found to be acceptable to its users and administrators. Moderate to strong correlations were found between all of NeuroScreen’s tests and gold-standard paper-and-pencil neuropsychological tests measuring the same neurocognitive domains. Overall performance on NeuroScreen significantly correlated with global performance on the gold-standard neuropsychological test battery.

Sensitivity and specificity analyses indicated that NeuroScreen's optimized cut-off scores had robust sensitivity (93.9% and 88.9%, respectively) and moderate specificity (63.6% and 62.5%, respectively) for detecting NCI in a sample of HIV patients under the GDS and NIH Working Group criteria. NeuroScreen therefore shows promise as an easy-to-use screening test for HIV-associated neurocognitive impairment, including mild impairment. NeuroScreen’s specificity was not as high as its sensitivity. It is interesting to note, however, that all three false positives self-reported “definitely” having at least one neurocognitive complaint (e.g., more trouble remembering things than usual, feeling slower when thinking and planning, and difficulty paying attention and concentrating), and one participant reported three complaints. It may be that the gold-standard battery or the GDS algorithm was not sensitive to these participants’ impairment and that NeuroScreen was able to detect it.

In the context of screening for NCI, the medical and financial consequences of both screening and full neuropsychological evaluation must be weighed. Because HIV-related NCI is so prevalent, conducting a gold-standard neuropsychological evaluation for every infected person would be ideal. However, doing so would be prohibitively costly and infeasible in many clinical settings. Overburdened and under-resourced health clinics most likely lack the time, staff, and financial resources to conduct full evaluations of all their HIV patients, and most clinics do not have staff neuropsychologists. Hence, an easy-to-use screening tool that helps clinics determine which patients are most likely to have HIV-related NCI can help them make better referrals. It can also help them use their limited resources more wisely by fully evaluating only the patients at highest risk. Thus, although NeuroScreen’s specificity was lower than its sensitivity, the lower specificity may be acceptable in such circumstances. Moreover, the positive predictive value (PPV) and negative predictive value (NPV) were both fairly robust (88.6% and 77.8%, respectively), and these are equally important properties of screening tests.47,48 Finally, we stress that NeuroScreen is a screening tool. We do not believe it should replace gold-standard neuropsychological evaluations, nor should it undermine the value of the clinician-patient interaction.

We have provided preliminary evidence for NeuroScreen’s construct validity (the computerized tests in NeuroScreen measure the same cognitive abilities as those in the gold-standard battery). We have also provided evidence for the app’s criterion validity (i.e., NeuroScreen is capable of detecting NCI in HIV patients). Nevertheless, this study has limitations. First, we did not collect any normative data and hence do not know how the NeuroScreen tests will perform among individuals without HIV. Second, we had a small sample in which most individuals had NCI. The 90% confidence interval (CI) for NeuroScreen’s sensitivity with this sample size was .71 to .93. Although this interval is fairly wide, it suggests that even at its low end NeuroScreen is almost as sensitive as the Carey et al. (2004) screening batteries. Even so, these findings need to be replicated in a larger sample with less neurocognitive impairment. Third, without normative data and/or a more neurocognitively heterogeneous sample, it is hard to establish how HIV-infected individuals without any neurocognitive impairment would perform. Finally, the MHBB battery includes several tests of cognitive domains that NeuroScreen does not assess (reading, verbal fluency, and perseveration). These are important domains to assess when evaluating an individual’s neuropsychological functioning. Nonetheless, the tests in NeuroScreen were chosen because they assess the cognitive domains most likely to be affected by HIV. Furthermore, NeuroScreen is not meant to be a substitute for a thorough neuropsychological evaluation.
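The study does not state which method produced the interval of .71 to .93. The sketch below uses the Wilson score interval, one common choice for a binomial proportion such as sensitivity, as an illustration of how such an interval can be computed. Because the method is an assumption, its output is not expected to reproduce the reported bounds. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, n, confidence=0.90):
    """Wilson score interval for a binomial proportion such as sensitivity."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.645 for 90%
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return center - half_width, center + half_width


# Sensitivity under the GDS criterion: 31 of 33 impaired participants detected.
low, high = wilson_interval(31, 33)
print(f"90% CI: {low:.2f} to {high:.2f}")
```

With only 33 impaired participants, even a single additional false negative changes the point estimate by about three percentage points. This is why the interval is wide and why replication in a larger sample matters.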

Despite these limitations, we believe that NeuroScreen and other testing tools based on mobile operating systems will offer busy health clinics an affordable, easy-to-use solution for screening for HIV-related NCI, as well as for NCI related to other disease processes. Such tools could support better referrals, better tracking, and integration with electronic medical records. NeuroScreen shows promise as an easy-to-use and accurate screening tool for mild NCI among PLWH. More research is needed to replicate these findings with a larger, non-convenience sample before NeuroScreen can be widely used. Nonetheless, if NeuroScreen is made widely available, routine screening for NCI may become more viable and, we hope, improve the lives of people living with NCI and HIV.

CONCLUSION

Mobile technology is transforming clinical practice for healthcare providers of all types. Mobile technologies offer powerful tools that are ultra-portable and easy to use. This study demonstrated that a smartphone-based screening test for HIV-related NCI was easy to use and acceptable to patients and providers. Furthermore, evidence was found for the construct validity of the tests embedded in the application and for its criterion validity, that is, its ability to detect NCI. Taking advantage of mobile platforms and automating many components of the neuropsychological testing process may help make testing more accurate, efficient, affordable, and accessible to those who need it.

Acknowledgements

This research was supported by grants from the National Institute of Mental Health to the HIV Center for Clinical and Behavioral Studies at NY State Psychiatric Institute and Columbia University (P30-MH43520; Principal Investigator: Anke A. Ehrhardt, Ph.D.), as well as to the Manhattan HIV Brain Bank at the Mount Sinai School of Medicine (U01MH083501 and U24MH100931; Principal Investigator: Susan Morgello, M.D.).

References

1. Grant I, Sacktor NC. HIV-Associated Neurocognitive Disorders. In: Gendelman HE, Grant I, Everall IP, et al., (eds.). The Neurology of AIDS. 3rd ed. New York: Oxford University Press; 2012:488–503.

2. Simioni S, Cavassini M, Annoni J-M, et al. Cognitive dysfunction in HIV patients despite long-standing suppression of viremia. AIDS. 2010;24(9):1243–50.

3. Heaton RK, Clifford DB, Franklin DR, et al. HIV-associated neurocognitive disorders persist in the era of potent antiretroviral therapy: CHARTER Study. Neurology. 2010;75:2087–96.

4. Grant I. Neurocognitive disturbances in HIV. International Review of Psychiatry. 2008;20(1):33–47.

5. Heaton RK, Franklin D, Ellis R, et al. HIV-associated neurocognitive disorders before and during the era of combination antiretroviral therapy: differences in rates, nature, and predictors. Journal of NeuroVirology. 2011;17(1):3–16.

6. Gorman A, Foley J, Ettenhofer M, Hinkin C, van Gorp W. Functional Consequences of HIV-Associated Neuropsychological Impairment. Neuropsychology Review. 2009;19(2):186–203.

7. Heaton RK, Marcotte TD, Mindt MR, et al. The impact of HIV-associated neuropsychological impairment on everyday functioning. Journal of the International Neuropsychological Society. May 2004;10(3):317–31.

8. Hinkin CH, Castellon SA, Durvasula RS, et al. Medication adherence among HIV+ adults: Effects of cognitive dysfunction and regimen complexity. Neurology. Dec 2002;59(12):1944–50.

9. Vivithanaporn P, Heo G, Gamble J, et al. Neurologic disease burden in treated HIV/AIDS predicts survival. Neurology. September 28, 2010;75(13):1150–8.

10. Ettenhofer ML, Foley J, Castellon SA, Hinkin CH. Reciprocal prediction of medication adherence and neurocognition in HIV/AIDS. Neurology. April 13, 2010;74(15):1217–22.

11. Ettenhofer ML, Hinkin CH, Castellon SA, et al. Aging, neurocognition, and medication adherence in HIV infection. The American journal of geriatric psychiatry: official journal of the American Association for Geriatric Psychiatry. 2009;17(4):281.

12. Hinkin CH, Hardy DJ, Mason KI, et al. Medication adherence in HIV-infected adults: effect of patient age, cognitive status, and substance abuse. AIDS (London, England). 2004;18(Suppl 1):S19.

13. Tozzi V, Balestra P, Galgani S, et al. Neurocognitive performance and quality of life in patients with HIV infection. AIDS Research and Human Retroviruses. 2003;19(8):643–52.

14. Tozzi V, Balestra P, Murri R, et al. Neurocognitive impairment influences quality of life in HIV-infected patients receiving HAART. International Journal of STD & AIDS. April 1, 2004;15(4):254–9.

15. Mannheimer S, Friedland G, Matts J, Child C, Chesney M. The consistency of adherence to antiretroviral therapy predicts biologic outcomes for human immunodeficiency virus-infected persons in clinical trials. Clin Infect Dis. 2002;34(8):1115–21.

16. Cysique LA, Bain MP, Lane TA, Brew BJ. Management issues in HIV-associated neurocognitive disorders. Neurobehavioral HIV Medicine. 2012;4:63–73.

17. The Mind Exchange Working Group. Assessment, Diagnosis, and Treatment of HIV-Associated Neurocognitive Disorder: A Consensus Report of the Mind Exchange Program. Clinical Infectious Diseases. 2013:in press. Epub ahead of print retrieved February 11, 2013, from http://cid.oxfordjournals.org/content/early/2013/2001/2010/cid.cis2975.full.pdf+html.

18. McArthur JC, Brew BJ. HIV-associated neurocognitive disorders: is there a hidden epidemic? AIDS. 2010;24(9):1367–70.

19. Schouten J, Cinque P, Gisslen M, Reiss P, Portegies P. HIV-1 infection and cognitive impairment in the cART era: a review. AIDS. 2011;25(5):561–75.

20. Valcour V, Paul R, Chiao S, Wendelken LA, Miller B. Screening for Cognitive Impairment in Human Immunodeficiency Virus. Clinical Infectious Diseases. October 15, 2011;53(8):836–42.

21. Power C, Selnes OA, Grim JA, McArthur JC. HIV Dementia Scale: A Rapid Screening Test. JAIDS Journal of Acquired Immune Deficiency Syndromes. 1995;8(3):273–8.

22. Sacktor NC, Wong M, Nakasujja N, et al. The International HIV Dementia Scale: A new rapid screening test for HIV dementia. AIDS. Sep 2005;19(13):1367–74.

23. Bottiggi KA, Chang JJ, Schmitt FA, et al. The HIV Dementia Scale: Predictive power in mild dementia and HAART. Journal of the Neurological Sciences. 2007;260(1–2):11–5.

24. Carey CL, Woods SP, Rippeth JD, et al. Initial validation of a screening battery for the detection of HIV-associated cognitive impairment. The Clinical neuropsychologist. 2004;18(2):234–48.

25. Koski L, Brouillette MJ, Lalonde R, et al. Computerized testing augments pencil-and-paper tasks in measuring HIV-associated mild cognitive impairment. HIV Medicine. 2011;12(8):472–80.

26. Marra CM, Lockhart D, Zunt JR, Perrin M, Coombs RW, Collier AC. Changes in CSF and plasma HIV-1 RNA and cognition after starting potent antiretroviral therapy. Neurology. April 22, 2003;60(8):1388–90.

27. Moore DJ, Roediger MJP, Eberly LE, et al. Identification of an Abbreviated Test Battery for Detection of HIV-Associated Neurocognitive Impairment in an Early-Managed HIV-Infected Cohort. PLoS ONE. 2012;7(11):e47310.

28. Smurzynski M, Wu K, Letendre S, et al. Effects of Central Nervous System Antiretroviral Penetration on Cognitive Functioning in the ALLRT Cohort. AIDS. 2011;25:357–65.

29. Wright EJ, Grund B, Robertson K, et al. Cardiovascular risk factors associated with lower baseline cognitive performance in HIV-positive persons. Neurology. September 7, 2010;75(10):864–73.

30. Cysique LA, Murray JM, Dunbar M, Jeyakumar V, Brew BJ. A screening algorithm for HIV-associated neurocognitive disorders. HIV Medicine. 2010;11(10):642–9.

31. Schatz P, Browndyke J. Applications of Computer-based Neuropsychological Assessment. The Journal of Head Trauma Rehabilitation. 2002;17(5):395–410.

32. Istepanian RSH, Jovanov E, Zhang YT. Guest Editorial Introduction to the Special Section on M-Health: Beyond Seamless Mobility and Global Wireless Health-Care Connectivity. IEEE Transactions on Information Technology in Biomedicine. 2004;8(4):405–14.

33. Reitan RM. Validity of the Trail Making test as an indicator of organic brain damage. Perceptual and Motor Skills. 1958;8:271–6.

34. Robbins RN, Remien RH, Mellins CA, Joska J, Stein D. Screening for HIV-associated dementia in South Africa: The potentials and pitfalls of task-shifting. AIDS Patient Care and STDs. 2011;25(10):587–93.

35. Heaton RK, Grant I, Butters N, White DA, et al. The HNRC 500: Neuropsychology of HIV infection at different disease stages. Journal of the International Neuropsychological Society. May 1995;1(3):231–51.

36. Blackstone K, Moore DJ, Heaton RK, et al. Diagnosing Symptomatic HIV-Associated Neurocognitive Disorders: Self-Report Versus Performance-Based Assessment of Everyday Functioning. Journal of the International Neuropsychological Society. 2012;18(1):79–88.

37. Carey CL, Woods SP, Gonzalez R, et al. Predictive Validity of Global Deficit Scores in Detecting Neuropsychological Impairment in HIV Infection. Journal of Clinical and Experimental Neuropsychology. 2004;26(3):307–19.

38. Antinori A, Arendt G, Becker JT, et al. Updated research nosology for HIV-associated neurocognitive disorders. Neurology. 2007;69:1789–99.

39. Heaton RK, Miller SW, Taylor MJ, Grant I. Revised Comprehensive Norms for an Expanded Halstead-Reitan Battery: Demographically Adjusted Neuropsychological Norms for African American and Caucasian Adults. Lutz, FL: Psychological Assessment Resources, Inc; 2004.

40. Heaton RK, Taylor M, Manly J, Tulsky D, Chelune GJ, Ivnik I, et al. Clinical Interpretations of the WAIS-III and WMS-III. San Diego, CA: Academic Press; 2001. Demographic effects and demographically corrected norms with the WAIS-III and WMS-III; pp. 181–210.

41. Kongs SK, Thompson LL, Iverson GL, Heaton RK. WCST-64: Wisconsin Card Sorting Test-64 Card Version Professional Manual. Odessa, FL: Psychological Assessment Resources; 2000.

42. Benedict RH, Schretlen D, Groninger L, Dobraski M, Shpritz B. Revision of the Brief Visuospatial Memory Test: Studies of normal performance, reliability, and validity. Psychological Assessment. 1996;8(2):145–53.

43. Benedict RH, Schretlen D, Groninger L, Brandt J. Hopkins Verbal Learning Test-Revised: Normative data and analysis of inter-form and test-retest reliability. Clinical Neuropsychologist. 1998;12(1):43–55.

44. Wechsler D. Wechsler Adult Intelligence Scale. 3rd ed. San Antonio, TX: Psychological Corporation; 1997.

45. Wilkinson GS. Wide Range Achievement Test. 3rd ed. Wilmington, DE: Wide Range; 1993.

46. Gladsjo JA, Schuman CC, Evans JD, Peavy GM, Miller SW, Heaton RK. Norms for letter and category fluency: Demographic corrections for age, education, and ethnicity. Assessment. 1999;6(2):147–78.

47. Evans MI, Galen RS, Britt DW. Principles of Screening. Seminars in Perinatology. 2005;29(6):364–6.

48. Grimes DA, Schulz KF. Uses and abuses of screening tests. The Lancet. 2002;359(9309):881–4.

Appendix

NeuroScreen tests

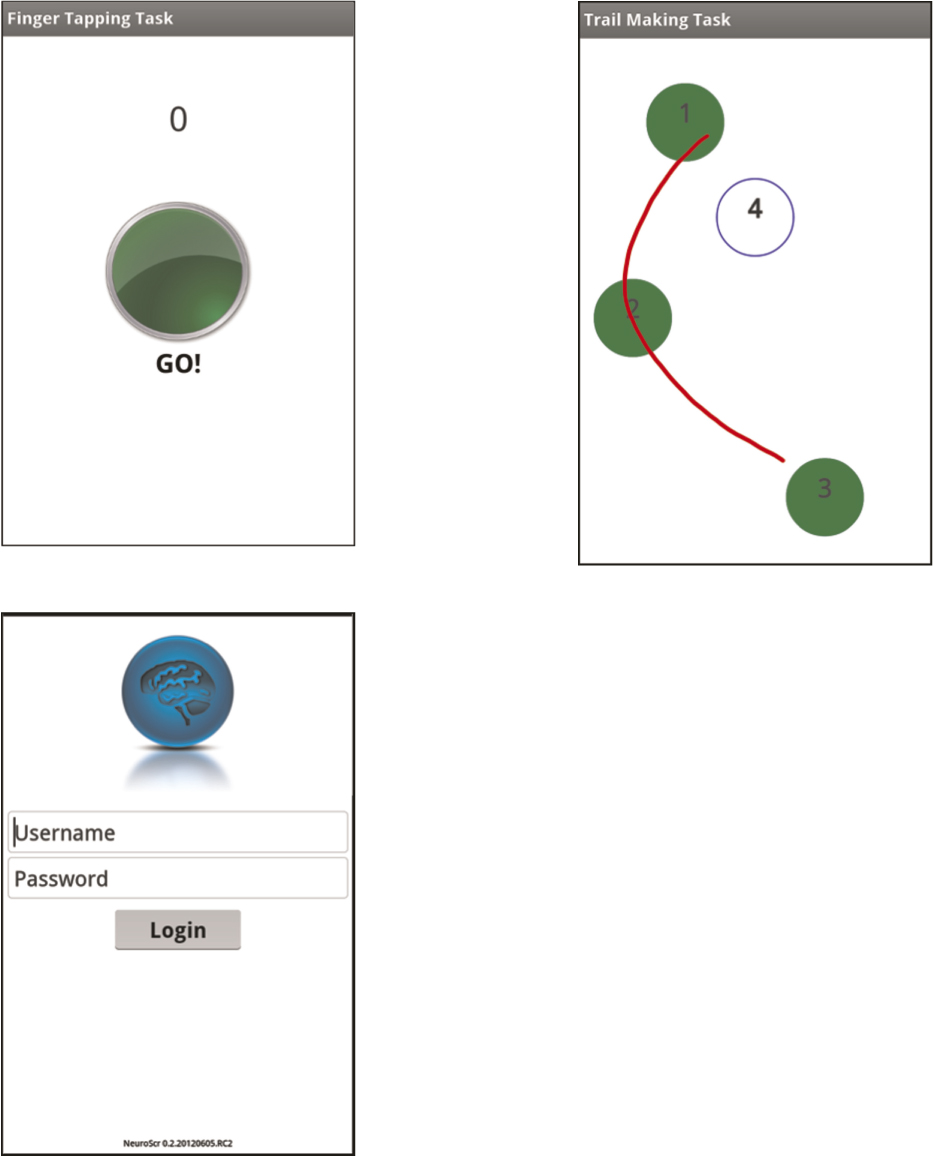

Learning and memory. Verbal learning and delayed memory are assessed via a 5-item word list with two learning trials and a 5-minute delayed recall. Words are prerecorded and played via the smartphone speaker. Every administration of the NeuroScreen word list is identical: each word is spoken at a 2-second interval in a clear, enunciated male voice. After the words are played, the patient is asked to say the words back in any order. The test administrator, viewing the screen, sees buttons for the five words on the list, as well as an “other” button. The administrator taps the buttons that correspond to the words the patient says; in the case of an intrusion, the administrator taps the “other” button. Learning is scored by totaling the number of correctly recalled words across both learning trials. The minimum score is 0 and the maximum score is 10.
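
The button-based scoring described above can be sketched as follows. This is a hypothetical illustration, not NeuroScreen’s code: the function names and the placeholder words are invented, and it assumes (the text does not say) that a list word tapped twice within one trial counts only once, which keeps each trial within the stated 0 to 5 range and the learning total within 0 to 10.

```python
from collections.abc import Iterable, Sequence

OTHER = "other"  # button the administrator taps for an intrusion


def score_recall_trial(taps: Iterable[str], word_list: Sequence[str]) -> tuple[int, int]:
    """Score one recall trial from the administrator's button taps.

    Returns (correct, intrusions). Assumption: a list word tapped more than
    once in the same trial counts once, so a trial scores 0..len(word_list).
    """
    recalled: set[str] = set()
    intrusions = 0
    for tap in taps:
        if tap == OTHER:
            intrusions += 1
        elif tap in word_list:
            recalled.add(tap)
        else:
            raise ValueError(f"unknown button: {tap!r}")
    return len(recalled), intrusions


def score_learning(trials: Sequence[Iterable[str]], word_list: Sequence[str]) -> int:
    """Total correct words across the learning trials (0-10 for 2 trials x 5 words)."""
    return sum(score_recall_trial(trial, word_list)[0] for trial in trials)


# Hypothetical placeholder words; the actual NeuroScreen list is not given here.
WORDS = ("cat", "lamp", "river", "coin", "chair")

if __name__ == "__main__":
    trial_1 = ["cat", "lamp", OTHER]
    trial_2 = ["cat", "lamp", "river", "cat"]
    print(score_learning([trial_1, trial_2], WORDS))  # 2 + 3 = 5
```

Intrusions are counted but not subtracted, since the text scores learning only by correctly recalled words; the same per-trial rule applies to the single delayed recall trial (0 to 5).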

The delayed recall test is automatically queued to be administered approximately 5 minutes after the last learning trial is completed. The timing is approximate because, if the 5-minute mark is reached during another test, NeuroScreen does not interrupt the test currently being administered. Rather, the program waits for the current test to be completed and then requires the administrator to complete the delayed recall trial. The administrator reads instructions asking the patient to say as many words as he or she can remember from the list. The administrator taps the buttons that correspond to the words the patient says; in the case of an intrusion, the administrator taps the “other” button. Delayed recall is scored by totaling the number of correctly recalled words. The minimum score is 0 and the maximum score is 5.
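
The “do not interrupt, then force the delayed recall” rule can be expressed as a small scheduling function. This is a hypothetical sketch rather than NeuroScreen’s implementation: it assumes each subsequent test’s duration is known in advance, checks elapsed time only between tests (because a running test is never interrupted), and, as an additional assumption not stated in the text, waits for the 5-minute mark if all other tests finish earlier. The test names and durations are placeholders.

```python
DELAY_S = 5 * 60  # delayed recall becomes due 5 minutes after the last learning trial


def schedule_delayed_recall(
    remaining_tests: list[tuple[str, float]], delay_s: float = DELAY_S
) -> tuple[list[str], float]:
    """Return (administration order, elapsed seconds when delayed recall starts).

    remaining_tests holds (name, duration_s) for the tests that follow the last
    learning trial. Elapsed time is checked only between tests, because a test
    that is already running is never interrupted.
    """
    order: list[str] = []
    elapsed = 0.0
    recall_start = None
    for name, duration_s in remaining_tests:
        if recall_start is None and elapsed >= delay_s:
            order.append("delayed_recall")  # due: force it before the next test
            recall_start = elapsed
        order.append(name)
        elapsed += duration_s
    if recall_start is None:
        # Assumption: if everything else finishes early, wait for the 5-minute mark.
        order.append("delayed_recall")
        recall_start = max(elapsed, delay_s)
    return order, recall_start


if __name__ == "__main__":
    # Hypothetical test names and durations in seconds.
    tests = [("number_span", 120), ("symbol_match", 90), ("finger_tapping", 150), ("trails", 80)]
    print(schedule_delayed_recall(tests))
```

In this example the 5-minute mark passes during the hypothetical finger tapping test, so delayed recall is inserted after it (at 360 seconds) and before the trails test.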

Working memory. Working memory is assessed via a number span test (forwards and backwards). Participants hear pre-recorded digit strings, starting with a string of 3 digits, up to a maximum of 9 digits. Each digit in each string is spoken at a 1-second interval in a clear, enunciated male voice. If participants do not repeat a string correctly, they are given another trial of the same span length. After two incorrect responses at the same span length, the task moves on to the backwards portion. The backwards span begins with a sequence of 3 digits and has a maximum of 8. As in the forwards test, participants get two trials per span length, and if both are incorrect, the test ends. The test records the longest forwards and backwards spans correctly repeated and is scored by summing the number of digits in those two spans. For example, if the longest forward span correctly repeated had 6 digits and the longest backwards span correctly repeated had 4 digits, the score for this test would be 10.
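
The discontinue-and-sum rule can be sketched in a few lines. In this hypothetical sketch the participant’s performance is abstracted as a callback `respond(length, trial)` that reports whether a given string was repeated correctly; the function and callback names are illustrative. It assumes span length increases by one digit after each success and that a participant who fails both 3-digit trials scores 0 for that direction. The usage example reproduces the worked example in the text (6 + 4 = 10).

```python
from collections.abc import Callable

# respond(span_length, trial_number) -> True if that digit string was repeated correctly.
Responder = Callable[[int, int], bool]


def longest_span(respond: Responder, start: int, maximum: int) -> int:
    """Longest span length repeated correctly (0 if none).

    Each length gets up to two trials; the second is given only if the first
    is wrong, and the section ends when both trials at a length are wrong.
    """
    longest = 0
    for length in range(start, maximum + 1):
        # any() short-circuits, so trial 2 runs only after a failed trial 1
        if not any(respond(length, trial) for trial in (1, 2)):
            break
        longest = length
    return longest


def score_number_span(forward: Responder, backward: Responder) -> int:
    """Sum of the longest correct forwards (3-9) and backwards (3-8) spans."""
    return longest_span(forward, 3, 9) + longest_span(backward, 3, 8)


if __name__ == "__main__":
    # Hypothetical participant matching the worked example in the text.
    def forward(length: int, trial: int) -> bool:
        return length <= 6

    def backward(length: int, trial: int) -> bool:
        return length <= 4

    print(score_number_span(forward, backward))  # 6 + 4 = 10
```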

Processing speed. Processing speed is assessed by two timed visual discrimination tasks, as well as a number input test. The first visual discrimination task requires patients to match a target shape to its corresponding number by tapping the number on the screen. This task is similar to the Digit Symbol Coding subtest of the WAIS-III (Wechsler, 1997) and the Symbol Digit Modalities Test (Smith, 1982). The second task requires patients to determine whether one of two symbols matches a symbol in an array of symbols and is similar to the Symbol Search subtest of the WAIS-III (Wechsler, 1997). Both tests last 45 seconds, and participants receive a practice trial with feedback. The first discrimination task has a total of 61 items, and the second has a total of 150 items. Each test is scored by summing the number of correctly answered items.
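
A minimal sketch of the scoring for both timed discrimination tasks follows, assuming each response is logged with its time since the scored (non-practice) trial began and whether it was correct. The record type, function name, and dictionary keys are hypothetical; the text does not say how a response made exactly at 45 seconds is handled, so this sketch counts only responses made strictly before the limit.

```python
from dataclasses import dataclass

TIME_LIMIT_S = 45.0
# Item counts from the text; the dictionary keys are hypothetical labels.
N_ITEMS = {"shape_number_match": 61, "symbol_match": 150}


@dataclass(frozen=True)
class Response:
    time_s: float  # seconds since the scored (non-practice) trial started
    correct: bool


def score_timed_task(responses: list[Response], n_items: int,
                     limit_s: float = TIME_LIMIT_S) -> int:
    """Count correct answers among the first n_items responses made before the limit."""
    return sum(1 for r in responses[:n_items] if r.correct and r.time_s < limit_s)


if __name__ == "__main__":
    log = [Response(1.0, True), Response(2.0, False), Response(44.9, True), Response(45.0, True)]
    print(score_timed_task(log, N_ITEMS["shape_number_match"]))  # 2
```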

On the number input test, participants see an on-screen keypad and a target number and are asked to enter the target number as quickly as possible. Each digit of the target number turns green as the participant enters it correctly. If an incorrect digit is pressed, the corresponding digit of the target number turns red, and the participant must correct it using a back button. After a target number is entered correctly, the participant moves on to a longer number. The test starts with a five-digit number and proceeds in one-digit increments up to a ten-digit number. Participants must complete all six trials. The smartphone records the completion time for each trial, as well as the number of errors made while inputting the number. Participants receive a practice trial to become familiar with the keypad. This test is scored by summing the completion times (in seconds) for each of the six trials. The maximum completion time allowed is 75 seconds.
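
The green/red entry logic and the time-sum score can be sketched as a small state machine. This is a hypothetical illustration: the function names and the `BACK` key label are invented, and two behaviors the text leaves open are assumptions (digit presses are ignored while a red digit is showing, and the back button does nothing when no red digit is showing). The 75-second limit is not applied because the text does not say whether it is per trial or for the whole test.

```python
BACK = "back"
TRIAL_LENGTHS = tuple(range(5, 11))  # 5- to 10-digit targets: six trials


def replay_entry(target: str, presses: list[str]) -> tuple[bool, int]:
    """Replay one trial's key presses; return (completed, number of errors).

    Assumptions (not specified in the text): while a wrong digit is shown in
    red, further digit presses are ignored until BACK removes it, and BACK
    does nothing when no red digit is showing.
    """
    entered = 0   # how many target digits are showing green
    red = False   # a wrong digit is currently showing in red
    errors = 0
    for key in presses:
        if key == BACK:
            red = False
        elif red:
            continue
        elif key == target[entered]:
            entered += 1
            if entered == len(target):
                return True, errors
        else:
            red = True
            errors += 1
    return False, errors


def score_number_input(completion_times_s: list[float]) -> float:
    """Sum of per-trial completion times in seconds (lower is faster)."""
    if len(completion_times_s) != len(TRIAL_LENGTHS):
        raise ValueError(f"expected {len(TRIAL_LENGTHS)} trial times")
    return sum(completion_times_s)


if __name__ == "__main__":
    print(replay_entry("58213", ["5", "8", "3", BACK, "2", "1", "3"]))  # (True, 1)
```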

Motor speed. Motor speed is assessed via a finger tapping test. Patients tap a virtual button on the screen as fast as they can. Each trial lasts 10 seconds. Participants complete three trials with the dominant hand and three with the nondominant hand, followed by two more trials with the dominant hand and then two more with the nondominant hand. Handedness is entered in the patient information section of NeuroScreen, and the patient is automatically presented with trials based on his or her handedness. This test is scored by summing, separately for each hand, the number of taps across that hand’s five trials.
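
The trial order and per-hand totals described above can be sketched as follows. The function names and the “left”/“right” handedness labels are hypothetical; the schedule follows the 3 + 3 + 2 + 2 order in the text, which gives each hand five trials.

```python
def tapping_schedule(dominant: str) -> list[str]:
    """Hand used on each 10-second trial, in administration order."""
    if dominant not in ("left", "right"):
        raise ValueError("dominant must be 'left' or 'right'")
    nondominant = "left" if dominant == "right" else "right"
    # 3 dominant, 3 nondominant, then 2 more dominant and 2 more nondominant
    return [dominant] * 3 + [nondominant] * 3 + [dominant] * 2 + [nondominant] * 2


def score_tapping(dominant: str, taps_per_trial: list[int]) -> dict[str, int]:
    """Total taps per hand across that hand's five trials."""
    schedule = tapping_schedule(dominant)
    if len(taps_per_trial) != len(schedule):
        raise ValueError(f"expected {len(schedule)} trials")
    totals = {"dominant": 0, "nondominant": 0}
    for hand, taps in zip(schedule, taps_per_trial):
        totals["dominant" if hand == dominant else "nondominant"] += taps
    return totals


if __name__ == "__main__":
    # Hypothetical tap counts for a right-handed participant.
    print(score_tapping("right", [50, 52, 51, 40, 41, 42, 49, 50, 39, 40]))
```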

Executive functioning. Executive functioning is assessed via a trail-making-type test similar to the Trail Making Test Parts A and B (Partington & Leiter, 1949; Reitan, 1958). In Trail 1, users draw a line with a finger between numbered circles (1–8). The smartphone automatically times how long it takes to complete the trial and systematically records any errors. If an error is made, users see a pop-up screen telling them to go back to the last correct circle. Trail 1 is discontinued at 35 seconds, and all discontinued trials are recorded as the maximum completion time. Trail 2 requires users to draw a line between numbered and lettered circles in ascending order, alternating between letters and numbers (letter, number, letter, number, etc.). The smartphone automatically times how long it takes to complete the trial and records any errors. Before each trial, users are given an abbreviated practice test. Scores for this test are completion times (in seconds). Trail 2 is discontinued at 40 seconds, with all discontinued trials recorded as the maximum completion time.
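
The timing, error, and discontinue rules can be sketched as a single scoring function. This is a hypothetical sketch: the function and constant names are invented, and it assumes the app logs each new circle the finger reaches with a timestamp, that a wrong circle counts as one error without advancing progress (the pop-up sends the user back to the last correct circle), and that a trial not finished within the cap is scored at the cap. The text does not list Trail 2’s circles, so the Trail 2 order in the usage example is a placeholder that only follows the stated letter, number, letter, number pattern.

```python
TRAIL1_CAP_S = 35.0
TRAIL2_CAP_S = 40.0
TRAIL1_ORDER = [str(n) for n in range(1, 9)]  # circles 1-8


def score_trail(order: list[str], touches: list[tuple[float, str]],
                cap_s: float) -> tuple[float, int, bool]:
    """Return (score in seconds, errors, completed).

    touches: (seconds since start, circle label) for each new circle the line
    reaches. A wrong circle counts as an error and progress stays at the last
    correct circle. Unfinished or too-slow trials are scored as cap_s.
    """
    next_idx = 0
    errors = 0
    for time_s, circle in touches:
        if time_s > cap_s:
            break  # discontinued at the cap
        if circle == order[next_idx]:
            next_idx += 1
            if next_idx == len(order):
                return time_s, errors, True
        else:
            errors += 1  # pop-up: return to the last correct circle
    return cap_s, errors, False


if __name__ == "__main__":
    trail1 = [(2.0 * n, str(n)) for n in range(1, 9)]
    print(score_trail(TRAIL1_ORDER, trail1, TRAIL1_CAP_S))  # (16.0, 0, True)
    trail2_order = ["A", "1", "B", "2"]  # hypothetical placeholder order
    trail2 = [(1.5, "A"), (3.0, "B"), (4.0, "1"), (6.0, "B"), (8.0, "2")]
    print(score_trail(trail2_order, trail2, TRAIL2_CAP_S))  # (8.0, 1, True)
```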