The Future of Automated Mobile Eye Diagnosis

Cassie A. Ludwig, BS1, Mia X. Shan, BS, BAH1, Nam Phuong H. Nguyen1, Daniel Y. Choi, MD1, Victoria Ku, BS1, Carson K. Lam, MD1

1Byers Eye Institute, Stanford University School of Medicine 2405 Watson Drive, Palo Alto, CA, USA 94305

Corresponding Author: carsonl@stanford.edu

Journal MTM 5:2:44–50, 2016

doi: 10.7309/jmtm.5.2.7

The current model of ophthalmic care requires the ophthalmologist’s involvement in data collection, diagnosis, treatment planning, and treatment execution. We hypothesize that ophthalmic data collection and diagnosis will be automated through mobile devices, while education, treatment planning, and fine dexterity tasks will continue to be performed by humans at clinic visits and in the operating room. Comprehensive automated mobile eye diagnosis includes the following steps: mobile diagnostic tests, image collection, image recognition and interpretation, integrative diagnostics, and user-friendly mobile platforms. Completely automated mobile eye diagnosis will require improvements in each of these components, particularly image recognition and interpretation and integrative diagnostics. Once refined and integrated into broader medical practice, automated mobile eye diagnosis has the potential to increase access to ophthalmic care with reduced costs, increased efficiency, and increased diagnostic accuracy.

Introduction

Current Model of Eye Care Data Collection

In a typical eye care clinic visit, a combination of an ophthalmic technician, nurse, optometrist, orthoptist, and/or ophthalmologist takes an ocular history and then measures the patient’s visual acuity, intraocular pressure, pupil shape and diameter, presence of a relative afferent pupillary defect, and range of extraocular movements in each eye. Tools used include a slit lamp to view the external eye and the anterior and posterior chambers, a gonioscope to evaluate the iridocorneal angle, and a direct and/or indirect ophthalmoscope to view the fundus. If necessary, additional testing includes measurement of marginal reflex distance and levator function, color vision testing, and optical coherence tomography, among others.

Once a diagnosis is made, a physician helps the patient choose among treatment options and provides education, medication, and/or a procedure as treatment.

Future Model of Eye Care Data Collection

With demand for ophthalmic care rising dramatically while the number of ophthalmologists grows only slightly, how can ophthalmologists continue to increase access to care? We hypothesize that ophthalmic diagnosis will be automated through mobile devices, with the help of support staff for education, treatment planning, and fine dexterity tasks. Transitioning from the current fixed forms of diagnosis to mobile ones will increase accessibility to ophthalmic services, particularly in low-resource settings. There are 2.6 billion smartphone subscriptions today, and 81% of health care professionals owned a smartphone in 2010, making the smartphone a highly accessible platform for automated diagnosis throughout the world.1,2 Delegating diagnosis to mobile devices may allow for faster, remote, low-cost diagnosis and disease monitoring.

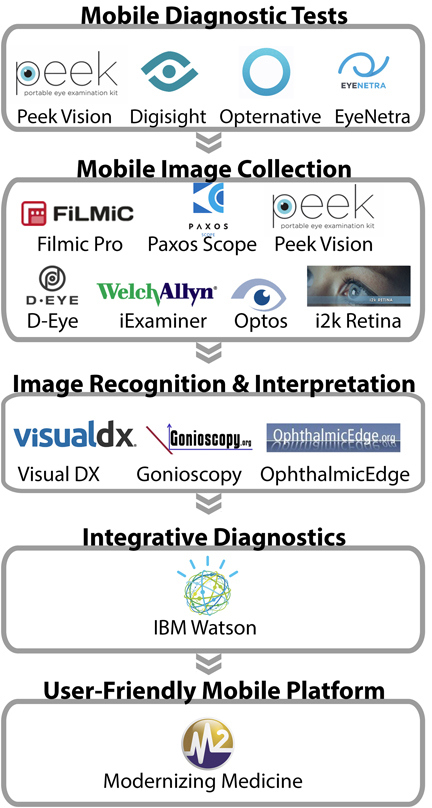

Automated mobile eye diagnosis will require the integration of data captured from mobile software and hardware technology to create evidence-based algorithms that formulate patient-specific, actionable recommendations. Comprehensive automated mobile eye diagnosis includes the following: mobile diagnostic tests, mobile image collection, image recognition and interpretation, integrative diagnostics, and user-friendly mobile platforms (Figure 1).

Figure 1: The Pathway of Automated Mobile Eye Diagnosis. Existing hardware and software that may be incorporated into a future comprehensive diagnostic system.

Mobile Diagnostic Tests

Mobile software applications that test visual acuity and visual fields include Peek Vision and SightBook.3,4 Refraction testing can be done on a computer screen through Opternative and EyeNetra.5,6 Peek Vision is also developing software capable of color and contrast testing.3 However, a comprehensive mobile exam is still limited by the lack of accurate mobile software and/or hardware to measure necessary values such as pupillary response, extraocular movements, and iridocorneal angle.
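A mobile acuity test must draw each optotype at a physically correct size for the viewing distance and screen. The sketch below assumes the standard convention that a 20/20 (6/6) letter subtends 5 arcminutes at the test distance and that the display's pixel density is known; it is not the implementation used by Peek Vision or SightBook, and the function names are hypothetical.

```python
import math

ARCMIN_PER_DEGREE = 60.0
MM_PER_INCH = 25.4

def snellen_to_logmar(numerator: float, denominator: float) -> float:
    """logMAR = log10(MAR); for a Snellen fraction the minimum angle of
    resolution (arcminutes) is denominator / numerator."""
    return math.log10(denominator / numerator)

def optotype_height_mm(distance_mm: float, numerator: float, denominator: float) -> float:
    """Physical letter height for a Snellen line at a given viewing distance.
    A reference (20/20) letter subtends 5 arcminutes; other lines scale with MAR."""
    mar = denominator / numerator
    angle_rad = math.radians(5.0 * mar / ARCMIN_PER_DEGREE)
    return 2.0 * distance_mm * math.tan(angle_rad / 2.0)

def optotype_height_px(height_mm: float, pixels_per_inch: float) -> int:
    """Convert a physical height to whole screen pixels (rounded)."""
    return round(height_mm / MM_PER_INCH * pixels_per_inch)
```

For example, `optotype_height_px(optotype_height_mm(3000, 6, 60), 326)` gives the pixel height of a 6/60 letter viewed at 3 m on a 326-ppi display; in practice the pixel density would have to be read from the specific device, and the viewing distance controlled or measured.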

Mobile Image Collection

Direct visualization of eye pathology through slit lamp examination, with or without additional fundoscopic imaging, can be critical in narrowing the differential diagnosis.7 Ophthalmologists have already developed several methods of visualizing both the anterior and posterior chambers with mobile phones. A simple method of fundus imaging described by Haddock et al. uses a smartphone and a 20D lens, with or without the Filmic Pro application (Cinegenix LLC, Seattle, WA, USA) to control the camera’s illumination.8

Supplemental hardware that facilitates image capture from mobile devices includes the PAXOS scope (DigiSight Technologies, Portola Valley, CA, USA; licensed from the inventors of the EyeGo smartphone imaging adapter), Peek Vision, D-EYE (D-EYE, Padova, Italy), and the iExaminer™ System (Welch Allyn, Doral, FL, USA).3,9–13 The PAXOS scope is a combined anterior and posterior segment mobile imaging system with a built-in variable-intensity light source and a posterior adapter that can accommodate various indirect lenses. It provides a 56-degree static field of view. Similarly, Peek Vision includes a low-cost adapter clip with optics blanks that re-route light from the flash to the retina.3 The same adapter, coupled with the supporting Peek Vision software, allows grading of cataract severity and retinal imaging. D-EYE provides a 5- to 8-degree field of view in an undilated pupil, and up to a 20-degree field of view when moved as close to the anterior segment as possible.11 The iExaminer™ System provides a 25-degree field of view in an undilated eye.13 The system requires the Welch Allyn PanOptic™ Ophthalmoscope, the iExaminer Adapter fitted to an iPhone 4 or 4S (Apple Inc., Cupertino, CA, USA), and the iExaminer App.13

Images taken by these devices are limited by mobile phone camera resolution and field of view, the latter depending on whether the pupil is dilated. Additionally, images that cannot be graded because of user error or patient pathology (e.g. cataracts obscuring the view of the posterior chamber) could be detrimental to patient care in systems that rely solely on mobile imaging. A recent study comparing the iExaminer™ System with standard fundus photography devices found lower image resolution and longer image acquisition times with the smartphone setup.14 However, a study comparing smartphone ophthalmoscopy using the D-EYE device with dilated slit-lamp examination of the retina found exact agreement between the two methods on diabetic retinopathy grade in 204 of 240 eyes (85%).15 Notably, the latter study used an iPhone 5 (8-megapixel iSight camera) and the former an iPhone 4 (5-megapixel still camera). Improved mobile imaging hardware will continue to increase resolution,16 and wider-field imaging can be obtained through pupil dilation (requiring a technician or nurse), scanning laser imaging (Optos PLC, Marlborough, MA, USA), or software that stitches retinal images together into a mosaic, such as i2k Retina (DualAlign LLC, Clifton Park, NY, USA).17 User error can be reduced as users gain experience with mobile imaging. However, patient pathology that obscures the view of the posterior chamber will ultimately limit mobile diagnosis.
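Some ungradable images caused by user error, such as defocus or motion blur, can be caught at capture time with a sharpness score so that the user is prompted to retake the photograph. The sketch below uses the variance of the Laplacian, a generic focus measure that we substitute here for illustration; it is not the method of any device named above, and the default threshold is a hypothetical placeholder that would need calibration on real fundus images.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# 3x3 discrete Laplacian kernel (4-neighbour form).
LAPLACIAN = np.array([[0.0, 1.0, 0.0],
                      [1.0, -4.0, 1.0],
                      [0.0, 1.0, 0.0]])

def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the Laplacian response over the valid image region.
    Sharp images have strong edges and therefore a high variance."""
    windows = sliding_window_view(gray.astype(float), (3, 3))
    response = np.einsum("ijkl,kl->ij", windows, LAPLACIAN)
    return float(response.var())

def box_blur(gray: np.ndarray, size: int = 5) -> np.ndarray:
    """Mean filter, used here only to simulate a defocused capture."""
    kernel = np.full((size, size), 1.0 / (size * size))
    windows = sliding_window_view(gray.astype(float), (size, size))
    return np.einsum("ijkl,kl->ij", windows, kernel)

def is_gradable(gray: np.ndarray, threshold: float = 100.0) -> bool:
    """Hypothetical gate: request a recapture when sharpness is below threshold."""
    return laplacian_variance(gray) >= threshold
```

A focus check of this kind addresses only one cause of ungradable images; it cannot detect pathology such as a dense cataract that blocks the view of the fundus.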

Furthermore, current mobile imaging cannot replace indirect ophthalmoscopy with scleral depression, which provides stereoscopic views of the indented retina extending anteriorly beyond the peripheral retina to the ora serrata and pars plana. This capability is needed to evaluate flashes and floaters, a common presentation in which retinal tear, hole, or detachment must be ruled out, often over multiple visits.

Another limitation of mobile image capture is the lack of a slit-lamp device for assessing individual corneal layers and the anterior chamber, which limits the precision of diagnosing corneal pathology and of detecting the anterior chamber cell and flare that indicate uveitis. Automated mobile eye diagnosis will require hardware or software that allows wide-field image capture of the fundus and visualization of the corneal layers and the anterior chamber.

Image Recognition and Interpretation

Following image capture, automated mobile diagnosis requires an interpretation system that detects multiple features of the anterior and posterior segments. The ideal system would recognize atypical color, contrast, shape, and size of all visible components of the eye. Anteriorly, it would distinguish the lids, lashes, sclera, conjunctiva, limbus, iris, cornea, pupil, and lens; posteriorly, it would distinguish the optic disc, macula, vascular arcades, and peripheral retina. After recognizing the component parts of the eye, a step called “segmentation”, the system should label the type of each abnormality and its location within the anatomy of the eye. Lastly, for a system to expand healthcare delivery and access, it would need to provide some level of instruction. At the simplest level, this could mean determining whether a patient should be referred to an ophthalmologist or rescreened at a later date. As we will discuss, significant progress has already been made toward these goals.

One way to expand access to specialty care is to build tools that enable primary care providers to make decisions that typically require specialty training. One strategy is to create systems that remove prior knowledge as a prerequisite for diagnosis. For example, identifying a skin lesion usually requires the clinician to have studied the presentation, shape, color, texture, and location of various skin lesions, as well as to have a sense of disease variation and overlap. VisualDx is a subscription-based website that walks users step by step through the visual diagnosis of dermatologic conditions, including some that overlap with ophthalmologic diagnoses.24 Similar sites include gonioscopy.org, oculonco.com, and ophthalmicedge.org.25–27

Peek Vision’s software automates one component of image recognition, calculation of the optic cup-to-disc ratio, which is important for diagnosing glaucomatous optic neuropathy.20 Additionally, one team has automated the quantification of the number, morphology, and reflective properties of drusen from spectral-domain optical coherence tomography (SD-OCT) images.21
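Once the optic disc and cup have been segmented, the cup-to-disc ratio reduces to simple geometry on the two masks. A minimal sketch, assuming binary NumPy masks produced by some upstream segmentation step (not shown) and using the vertical-diameter convention; this is not Peek Vision's implementation, and the function names are hypothetical.

```python
import numpy as np

def vertical_extent(mask: np.ndarray) -> int:
    """Number of image rows spanned by the True pixels of a binary mask."""
    rows = np.flatnonzero(mask.any(axis=1))
    if rows.size == 0:
        return 0
    return int(rows[-1] - rows[0] + 1)

def vertical_cup_to_disc_ratio(cup_mask: np.ndarray, disc_mask: np.ndarray) -> float:
    """Vertical cup diameter divided by vertical disc diameter, in pixels."""
    disc = vertical_extent(disc_mask)
    if disc == 0:
        raise ValueError("empty disc mask: the image may be ungradable")
    return vertical_extent(cup_mask) / disc
```

A ratio above a clinically chosen cutoff could then trigger referral; the accuracy of the result depends entirely on the quality of the upstream segmentation.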

An alternative solution is crowdsourcing. In two studies, researchers demonstrated the utility of having untrained people grade retinal images.28,29 However, variability in human interpretation and in the number and experience of reviewers currently prevents its clinical application.
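One common way to turn many untrained graders' answers into a single label is a majority vote with an agreement score, escalating low-consensus images to an expert. A minimal sketch; the labels, the minimum-agreement value, and the function name are hypothetical, and the cited studies may have aggregated grades differently.

```python
from collections import Counter

def aggregate_crowd_grades(grades, min_agreement=0.7):
    """Majority-vote a list of grades for one image.

    Returns (label, agreement, needs_expert), where agreement is the fraction
    of graders who chose the winning label. Ties and low agreement are
    escalated to an expert reviewer."""
    if not grades:
        raise ValueError("no grades supplied")
    counts = Counter(grades).most_common()
    label, top = counts[0]
    agreement = top / len(grades)
    tied = len(counts) > 1 and counts[1][1] == top
    needs_expert = tied or agreement < min_agreement
    return label, agreement, needs_expert
```

The `min_agreement` cutoff directly encodes the concern above: setting it higher sends more images to experts and reduces the savings that crowdsourcing offers.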

Several research groups are developing algorithms for automated diagnosis. The most developed application of these algorithms is diabetic retinopathy screening, in which many proposed algorithms reach sensitivity and specificity of 90% or higher.23 One of the most widely published algorithms is the Iowa Detection System, which takes a color retinal photograph as input and outputs a number between 0 and 1. The closer the output is to 1, the more likely it is that the patient has a stage of diabetic retinopathy that warrants referral to an ophthalmologist, or that the photograph is of insufficient quality to determine the stage of diabetic retinopathy.22 Its database includes numerous retinal images of racially diverse individuals taken with different camera types, and the Iowa Detection System has been found to perform comparably to retina specialists.16 Which algorithm is best, however, remains debatable, because algorithms are tested against human interpreters on limited datasets in which the gold standard is itself determined by another human interpreter or a consensus of interpreters.23 Developing an algorithm that can classify disease beyond a single disease spectrum, or identify the clinical significance of retinal findings, remains an area of active research. We are living amid a turning point in computing that has already revolutionized the field of computer vision and is set to change medical imaging.
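Because a system such as the Iowa Detection System outputs a continuous score, a screening program must choose a cutoff above which patients are referred, and that cutoff trades sensitivity against specificity. A minimal sketch of how both are computed against a reference standard; the scores, labels, and thresholds are invented for illustration and are not results from any cited study.

```python
def sensitivity_specificity(scores, truth, threshold):
    """Sensitivity and specificity when score >= threshold means 'refer'.

    truth[i] is True if the reference standard (e.g. a human grader)
    judged image i to show referable disease."""
    if len(scores) != len(truth):
        raise ValueError("scores and truth must have the same length")
    tp = fn = tn = fp = 0
    for score, diseased in zip(scores, truth):
        refer = score >= threshold
        if diseased and refer:
            tp += 1
        elif diseased:
            fn += 1
        elif refer:
            fp += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    return tp / (tp + fn), tn / (tn + fp)
```

Lowering the threshold refers more patients, raising sensitivity at the cost of specificity. Note that both numbers are only as good as the human-defined reference labels in `truth`, which is the limitation raised above.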

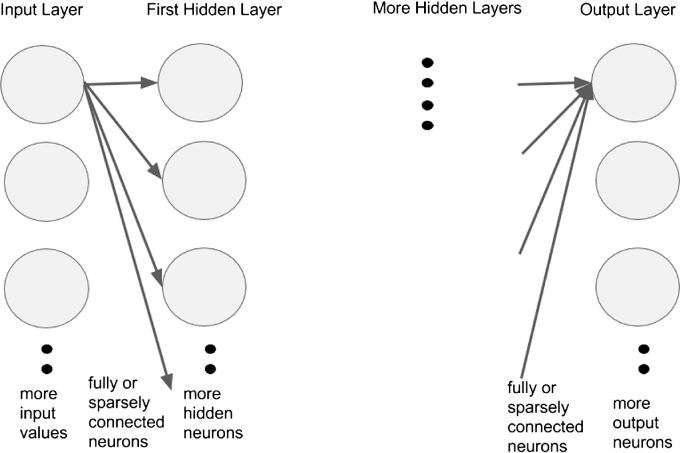

Neural networks can improve upon these algorithms for automated image recognition and interpretation. Neural networks are biologically inspired algorithms that learn to approximate a function (Figure 2). Their inputs can be text, numbers, or images. They contain interconnected layers of functions called “neurons”, each of which receives inputs from neurons in the preceding layer and passes its output to the next layer. The function that the neural network approximates is encoded in the strengths of the connections between neurons. These connections are tuned by training the network on example inputs paired with their correct outputs, for example, a retinal photograph as the input and its correct diagnosis as the output. Neural networks have been in development since the 1940s, and many research groups have already applied them to the interpretation of ophthalmic images.18,19 However, recent advances in the availability of high-performance computing hardware have made it feasible to construct and train neural networks that contain many layers, various schemes of interconnection, and many neurons in each layer. Because of this repeated stacking of many layers of neurons, this form of computation has been termed “deep learning”, and it lies at the core of artificial intelligence software such as Google’s self-driving cars (Google Inc., Mountain View, CA, USA), voice recognition in phones, and Facebook’s facial recognition (Facebook Inc., Menlo Park, CA, USA).

Figure 2: General Architecture of a Neural Network. A neural network can have an arbitrary number of neurons in each layer, an arbitrary number of layers, and different schemes of interconnection between neurons in adjacent layers. The network shown is fully connected, meaning that every neuron in a layer is connected to every neuron in the adjacent layer. Inputs are values such as pixel values from an image, and layers can be a one-dimensional row of neurons, as in this example, or a three-dimensional volume, as in convolutional neural networks.
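The fully connected architecture described for Figure 2 can be written in a few lines: each layer multiplies its input by a weight matrix (the connection strengths), adds a bias, and applies a nonlinearity. A minimal NumPy sketch of the forward pass only; the layer sizes, the random initialization, and the ReLU and sigmoid choices are assumptions for illustration, and training (adjusting the weights from labeled examples) is omitted.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_network(layer_sizes, seed=0):
    """Random weights and zero biases for each pair of adjacent layers.
    Every neuron in one layer connects to every neuron in the next."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def forward(params, x):
    """Propagate a batch of inputs (one row per example) through all layers.
    Hidden layers use ReLU; the single output unit uses a sigmoid so the
    result lies between 0 and 1, like a referral probability."""
    for W, b in params[:-1]:
        x = relu(x @ W + b)
    W, b = params[-1]
    return sigmoid(x @ W + b)
```

For instance, `forward(init_network([64, 16, 8, 1]), patches)` maps a batch of flattened 8x8 image patches (64 pixel values each) to one score per patch. With untrained random weights these scores are meaningless; training is what encodes a useful function in the weights.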

Most algorithms proposed thus far for image diagnosis, even those that use neural networks, rely on specific hand-coded features used in combination for image recognition. Ophthalmic diagnosis, however, is a complex task that takes into account the location of lesions, macroscopic and microscopic structural changes, and textures that are difficult to describe even for ophthalmologists. Deep learning, although limited by drawbacks such as overfitting and the need for large training sets, makes few prior assumptions about the features needed to recognize images; instead, it learns features from the data on which it is trained. We anticipate that the next five years will bring a wave of literature describing the complex tasks these algorithms can perform in automated ophthalmic diagnosis.30,31
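The difference between hand-coded and learned features can be made concrete with a single convolutional filter. In a hand-engineered pipeline, a programmer fixes the filter weights (for example, an edge detector); in a convolutional neural network, the same weights are parameters adjusted by gradient descent. The toy sketch below recovers a hidden 3x3 filter purely from input/output examples by minimizing mean squared error. The random image, the Sobel-style target filter, the learning rate, and the step count are arbitrary illustrative choices; real networks stack many such filters with nonlinearities and learn them from labeled images rather than from a known target filter.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, the operation CNN layers actually compute."""
    windows = sliding_window_view(image, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def learn_kernel(image, target, size=3, lr=0.1, steps=3000):
    """Fit a size x size filter so that conv2d_valid(image, kernel) matches
    target, using plain gradient descent on the mean squared error."""
    kernel = np.zeros((size, size))
    windows = sliding_window_view(image, (size, size))
    n = target.size
    for _ in range(steps):
        residual = np.einsum("ijkl,kl->ij", windows, kernel) - target
        # Gradient of the MSE: (2/n) * sum over positions of residual * input window.
        grad = (2.0 / n) * np.einsum("ijkl,ij->kl", windows, residual)
        kernel -= lr * grad
    return kernel

rng = np.random.default_rng(0)
image = rng.random((16, 16))
hand_coded = np.array([[-1.0, 0.0, 1.0],
                       [-2.0, 0.0, 2.0],
                       [-1.0, 0.0, 1.0]])  # Sobel-style vertical-edge filter
target = conv2d_valid(image, hand_coded)
learned = learn_kernel(image, target)      # approaches hand_coded without being told it
```

Here the learned filter converges to the hand-coded one only because the target was generated from it; the point is that the weights come from data, so the same procedure can discover filters no one thought to write down.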

Integrative Diagnostics

Ultimately, all data points gathered need to be integrated into one mobile diagnostic system, for example with artificial intelligence software. IBM Watson, made famous by its Jeopardy! win, is an example of software that can integrate evidence-based medical knowledge accurately and consistently for automated diagnosis.32 IBM Watson’s servers can process 500 gigabytes of information per second, the equivalent of 1 million books.32 Converting this information into evidence-based diagnostic algorithms could keep diagnostic programming as current as the published evidence.
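One standard, evidence-based way to combine several test results into a single probability is Bayes' theorem in odds form: multiply the pre-test odds by the likelihood ratio of each finding. The sketch below shows that calculation. It is a swapped-in textbook technique, not how IBM Watson works; it assumes the findings are conditionally independent given the disease (often untrue in practice), and any prevalence or likelihood-ratio values passed to it would have to come from published diagnostic accuracy studies.

```python
from math import prod

def post_test_probability(pretest_probability, likelihood_ratios):
    """Combine findings with Bayes' theorem in odds form.

    post-test odds = pre-test odds * LR_1 * LR_2 * ...
    (assumes the findings are conditionally independent given disease status)."""
    if not 0.0 < pretest_probability < 1.0:
        raise ValueError("pre-test probability must be strictly between 0 and 1")
    odds = pretest_probability / (1.0 - pretest_probability)
    odds *= prod(likelihood_ratios)
    return odds / (1.0 + odds)
```

For example, with a hypothetical 10% pre-test probability and two positive findings with hypothetical likelihood ratios of 4.0 and 2.5, `post_test_probability(0.10, [4.0, 2.5])` returns 10/19, or about 0.53. A likelihood ratio below 1 (a negative finding) lowers the probability.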

User-Friendly Mobile Platform

To deliver the final information to the patient and/or physician, a user-friendly interface between the mobile phone and user will be necessary. An example of such an interface is Modernizing Medicine’s electronic medical assistant (EMA) iPad application for ophthalmology, which integrates published healthcare information and provides physicians with treatment options and outcome measures.33,34 While a physician-mobile device interface may be useful in guiding treatment plans, a patient-directed interface may ultimately provide actionable steps for the patient to take before ever seeing a physician for treatment.

Discussion

The future of automated mobile eye diagnosis lies in improvements in each of the above components, particularly image recognition and interpretation and integrative diagnostics. With automated mobile eye diagnosis, patients will have faster access to information about their conditions to guide their next steps. Automated diagnosis will be a reliable, cost-effective, and accessible tool for individuals across demographics to gather ophthalmologic information necessary to understand and manage their conditions.

Benefits of Automated Eye Diagnosis

Given the increasing availability of wireless networks and advances in technology, automated eye diagnosis will prove to be more cost-effective, accessible, and reliable than specialist diagnosis.35,36 Automated diagnosis will have the highest yield in low-resource settings, where both physical and economic barriers limit access to specialist diagnosis. In a 2007 study, researchers in Scotland found that automated grading of images for diabetic retinopathy reduced both the workload and the associated costs of care.37 For a cohort of 160,000 patients, automated grading lowered the total cost of grading by 47% compared with manual grading, a saving of US $0.25 per patient. Similar studies of automated diabetic retinopathy and cataract services in Canada, the US, and rural South Africa have also shown that early screening programs save between $1,206 and $3,900 per sight per year in diagnosis, treatment, and referrals.38–41 As the cost of automated eye diagnosis decreases, the service can more easily spread to smaller clinics in rural areas for early detection and triage of eye pathology.
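The per-patient and cohort figures from the Scottish study can be cross-checked with simple arithmetic. Assuming (our assumption, not stated in the study as summarized here) that the 47% reduction and the US $0.25 saving refer to the same per-patient grading cost, the implied costs and the total saving for the 160,000-patient cohort are:

$$
\begin{aligned}
\text{total saving} &= 160{,}000 \times \$0.25 = \$40{,}000,\\
C_{\text{manual}} &\approx \frac{\$0.25}{0.47} \approx \$0.53 \text{ per patient},\\
C_{\text{automated}} &\approx \$0.53 - \$0.25 = \$0.28 \text{ per patient}.
\end{aligned}
$$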

Additionally, unlike humans, computers can rapidly incorporate new scientific information into their algorithms. The processing power of IBM Watson, for example, far exceeds that of any human specialist.32 Furthermore, humans are subject to various biases during diagnosis, such as anchoring bias (relying too heavily on the first piece of information given) and framing bias (being influenced by the way information is phrased).36 Automated eye diagnostic programs can continuously add new information to their databases and algorithms while avoiding these biases in decision-making.

Drawbacks to Automated Eye Diagnosis

Potential drawbacks to automated eye diagnosis include initial costs as well as concerns about its reliability and sensitivity. The design phase involves initial investment in image-capture hardware, software, and program developers. Implementation requires distributing hardware and software to the appropriate users. Maintenance entails ongoing costs for the programmers and personnel running the service. However, as mentioned previously for automated diabetic retinopathy and cataract diagnosis, the savings can eventually outweigh the initial costs as screening services expand to more patients.37–41

The accuracy of every step of automated eye diagnosis is critical to its success. In the Scottish diabetic retinopathy study, automated grading of 14,406 images from 6,722 patients missed three more cases of referable disease than manual grading did.37 However, these were cases of non-sight-threatening maculopathy rather than referable or proliferative retinopathy. Additionally, in 2010, researchers found that an algorithm for automated detection of diabetic retinopathy lagged only slightly behind retinal specialists in sensitivity and specificity.16 As algorithms improve and are trained and validated on larger datasets, we believe that automation will outperform experts in sensitivity and specificity.

Lastly, transmitting patient information over mobile devices requires strict, established protocols for patient consent and the security of personal health information. Software developers must always consider the security of patient information.
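As one concrete safeguard, direct identifiers can be stripped and the patient ID replaced with a keyed pseudonym before an exam record leaves the clinic's device. A minimal sketch using only the Python standard library; the field names and key handling are hypothetical, pseudonymization alone does not make data anonymous or satisfy any particular regulation, and the transfer itself would still need transport encryption such as TLS.

```python
import hashlib
import hmac
import json

# Fields treated as direct identifiers in this sketch (hypothetical, not exhaustive).
DIRECT_IDENTIFIERS = {"name", "date_of_birth", "phone", "address"}

def pseudonymize(record, secret_key):
    """Drop direct identifiers and replace patient_id with an HMAC-SHA256
    pseudonym. The same patient and key always map to the same pseudonym,
    so follow-up images can be linked without revealing the ID."""
    cleaned = {k: v for k, v in record.items()
               if k not in DIRECT_IDENTIFIERS and k != "patient_id"}
    tag = hmac.new(secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256)
    cleaned["pseudonym"] = tag.hexdigest()
    return cleaned

def to_payload(record, secret_key):
    """Serialize the pseudonymized record as JSON for upload."""
    return json.dumps(pseudonymize(record, secret_key), sort_keys=True)
```

The secret key must stay on the clinic side; anyone holding it can re-link pseudonyms to patient IDs by recomputing the HMAC.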

Next Steps

The first step in shifting the roles of ophthalmologists away from data collection will be training ancillary providers to collect the data necessary for diagnosis. These providers can then submit a digital representation of the information to software that gives instructions on treatment options and further testing, shifting the focus of ophthalmologists towards education, treatment planning, and treatment implementation.

Conclusion

We believe that automated mobile eye diagnosis using evidence-based algorithms will increase patient safety, improve access to ophthalmic services, and facilitate timely referrals. However, completely automated eye diagnosis will require improvements in image capture and recognition as well as in automated integrative diagnostics. Once refined and integrated into broader medical practice, automated eye diagnosis has the potential to become a powerful tool for increasing access to ophthalmic services worldwide.

Acknowledgements

The project described herein was conducted with support for Cassie A. Ludwig from the TL1 component of the Stanford Clinical and Translational Science Award to Spectrum (NIH TL1 TR 001084).

References

1. Ericsson. Ericsson Mobility Report 2015; http://www.ericsson.com/res/docs/2015/mobility-report/ericsson-mobility-report-nov-2015.pdf. (accessed 7 Dec 2015).

2. A survey of mobile phone usage by health professionals in the UK. 2010; http://www.d4.org.uk/research/survey-mobile-phone-use-health-professionals-UK.pdf. (accessed 7 Dec 2015).

3. Peek Vision. 2015; http://www.peekvision.org/. (accessed 20 Mar 2015).

4. DigiSight. 2014; https://www.digisight.net/digisight/index.php. (accessed 20 Mar 2015).

5. Opternative. 2015; https://www.opternative.com/. (accessed 20 Mar 2015).

6. EyeNetra. 2013; http://eyenetra.com/aboutus-company.html. (accessed 20 Mar 2015).

7. Image Analysis and Modeling in Ophthalmology. USA: CRC Press; 2014.

8. Haddock LJ, Kim DY, Mukai S. Simple inexpensive technique for high-quality smartphone fundus photography in human and animal eyes. 2013;2013:518479.

9. Myung A, Jais A, He L, Blumenkranz MS, Chang RT. 3D Printed Smartphone Indirect Lens Adapter for Rapid, High Quality Retinal Imaging. Journal of Mobile Technology in Medicine 2014;3(1):9–15.

10. Myung A, Jais A, He L, Chang RT. Simple, Low-Cost Smartphone Adapter for Rapid, High Quality Ocular Anterior Segment Imaging: A Photo Diary. Journal of Mobile Technology in Medicine 2014;3(1):2–8.

11. D-EYE. 2015; http://www.d-eyecare.com/. (accessed 7 Dec 2015).

12. Paxos Scope. 2014; https://www.digisight.net/digisight/paxos-scope.php. (accessed 7 Dec 2015).

13. WelchAllyn. iEXAMINER. 2015; http://www.welchallyn.com/en/microsites/iexaminer.html. (accessed 7 Dec 2015).

14. Darma S, Zantvoord F, Verbraak FD. The quality and usability of smartphone and hand-held fundus photography, compared to standard fundus photography. Acta ophthalmologica 2015;93(4):310–11.

15. Russo A, Morescalchi F, Costagliola C, Delcassi L, Semeraro F. Comparison of smartphone ophthalmoscopy with slit-lamp biomicroscopy for grading diabetic retinopathy. American journal of ophthalmology 2015;159(2):360–364.e1.

16. Abramoff MD, Niemeijer M, Russell SR. Automated detection of diabetic retinopathy: barriers to translation into clinical practice. Expert review of medical devices 2010;7(2):287–296.

17. Maamari RN, Keenan JD, Fletcher DA, Margolis TP. A mobile phone-based retinal camera for portable wide field imaging. The British journal of ophthalmology 2014;98(4):438–441.

18. Gardner GG, Keating D, Williamson TH, Elliott AT. Automatic detection of diabetic retinopathy using an artificial neural network: a screening tool. The British journal of ophthalmology 1996;80(11):940–944.

19. Yun WL, Acharya UR, Venkatesh YV, et al. Identification of different stages of diabetic retinopathy using retinal optical images. Information Sciences 2008;178(1):106–121.

20. Giardini ME, Livingstone IA, Jordan S, et al. A smartphone based ophthalmoscope. Conference proceedings: … Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual Conference 2014;2014:2177–2180.

21. de Sisternes L, Simon N, Tibshirani R, Leng T, Rubin DL. Quantitative SD-OCT imaging biomarkers as indicators of age-related macular degeneration progression. Investigative ophthalmology & visual science 2014;55(11):7093–7103.

22. Hansen MB, Abràmoff MD, Folk JC, et al. Results of Automated Retinal Image Analysis for Detection of Diabetic Retinopathy from the Nakuru Study, Kenya. PloS one 2015;10(10).

23. Mookiah MR, Acharya UR, Chua CK, et al. Computer-aided diagnosis of diabetic retinopathy: A review. Computers in biology and medicine 2013;43(12):2136–55.

24. VisualDx. 2015; http://www.visualdx.com/. (accessed 20 Mar 2015).

25. WLM A. Gonioscopy.org. 2015; http://gonioscopy.org/. (accessed 20 Mar 2015).

26. B D. Oculonco. 2015; http://www.oculonco.com/. (accessed 20 Mar 2015).

27. OphthalmicEdge. 2014; ophthalmicedge.org (accessed 20 Mar 2015).

28. Mitry D, Peto T, Hayat S, et al. Crowdsourcing as a novel technique for retinal fundus photography classification: analysis of images in the EPIC Norfolk cohort on behalf of the UK Biobank Eye and Vision Consortium. PloS one 2013;8(8):71154.

29. Brady CJ, Villanti AC, Pearson JL, et al. Rapid grading of fundus photographs for diabetic retinopathy using crowdsourcing. Journal of medical Internet research 2014;16(10):e233.

30. Lim G, Lee ML, Hsu W, et al. Transformed Representations for Convolutional Neural Networks in Diabetic Retinopathy Screening. In: Workshops at the Twenty-Eighth AAAI Conference on Artificial Intelligence; 2014 Jun 18.

31. Krizhevsky A, Sutskever I, Hinton G. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems 2012;25:1106–14.

32. IBM Watson. 2015; http://www.ibm.com/smarterplanet/us/en/ibmwatson/. (accessed 20 Mar 2015).

33. Dattoli K CJ. IBM Reveals New Companies Developing Watson-Powered Apps: Healthcare, Retail and Financial Services Firms To Debut Mobile Applications Later This Year. 2014. http://www-03.ibm.com/press/us/en/pressrelease/43936.wss. (accessed 20 Mar 2015).

34. Modernizing Medicine. 2014; https://www.modmed.com/. (accessed 20 Mar 2015).

35. Hansmann U, Merk L, Nicklous MS, Stober T. Pervasive Computing: The Mobile World. 2nd ed. Springer-Verlag Berlin Heidelberg; 2003.

36. Dilsizian SE, Siegel EL. Artificial intelligence in medicine and cardiac imaging: harnessing big data and advanced computing to provide personalized medical diagnosis and treatment. Current cardiology reports 2014;16(1):441.

37. Scotland GS, McNamee P, Philip S, et al. Cost-effectiveness of implementing automated grading within the national screening programme for diabetic retinopathy in Scotland. The British journal of ophthalmology 2007;91(11):1518–23.

38. Khan T, Bertram MY, Jina R, et al. Preventing diabetes blindness: cost effectiveness of a screening programme using digital non-mydriatic fundus photography for diabetic retinopathy in a primary health care setting in South Africa. Diabetes research and clinical practice 2013;101(2):170–6.

39. Benbassat J, Polak BC. Reliability of screening methods for diabetic retinopathy. Diabetic medicine: a journal of the British Diabetic Association 2009;26(8):783–90.

40. Whited JD, Datta SK, Aiello LM, et al. A modeled economic analysis of a digital tele-ophthalmology system as used by three federal health care agencies for detecting proliferative diabetic retinopathy. Telemedicine journal and e-health: the official journal of the American Telemedicine Association 2005;11(6):641–651.

41. Li Z, Wu C, Olayiwola JN, Hilaire DS, Huang JJ. Telemedicine-based digital retinal imaging vs standard ophthalmologic evaluation for the assessment of diabetic retinopathy. Connecticut medicine 2012;76(2):85–90.